How many times have you gone to karaoke, or heard someone sing along with a song on the radio, only to sing the wrong lyrics? Don’t be ashamed; it’s more common than you think. When I first heard Elton John’s “Tiny Dancer”, without knowing the song’s title, I wondered about the lyric “hold me closer, Tony Danza”. Danza is a famous Italian-American actor and former boxer, and I was confused about why the song was about him. Only years later was I told that the lyric was actually “hold me closer, tiny dancer”, and from that point on, that is what I hear.

The same process occurs when we speak to someone with a heavy accent. At first the brain hears an incomprehensible message and fills the gaps with assumptions. Once the correct message is discovered, the brain retunes itself and the message “pops out”. Neuroscientists at UC Berkeley are studying this phenomenon by presenting auditory stimuli while monitoring neural activity.

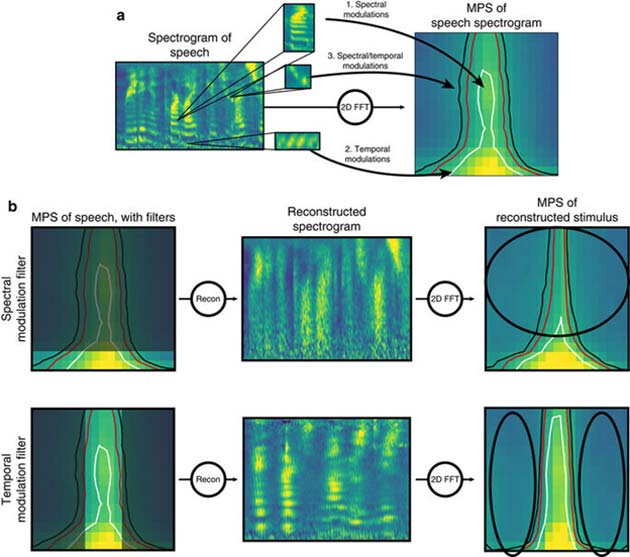

(a) The MPS describes the oscillatory patterns present in a time-frequency representation of sound. Left, the spectrogram of unfiltered speech is shown. Right, the MPS (calculated from a 2D FFT) is shown. Patterns in the spectrogram are reflected as power along the temporal or spectral axes of the MPS. Rapid spectral fluctuations (for example, harmonic stacks from pitch, (1)) are represented near the middle/top of the MPS. Rapid temporal fluctuations (for example, plosives, (2)) are represented near the bottom/sides of the MPS. Joint spectral/temporal fluctuations (for example, rising pitch and phoneme changes, (3)) are represented in the upper corners of the MPS. (b) Left column: filtered speech was created by filtering either spectral (top) or temporal (bottom) regions of the MPS space. Middle column: spectrograms of the resulting filtered speech are shown. Right column: recalculating the MPS on the filtered speech spectrogram shows that the MPS now lacks power in the filtered regions. (Nature)
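The modulation power spectrum (MPS) in the caption can be sketched in a few lines of NumPy: take the 2D FFT of a log-amplitude spectrogram and keep the squared magnitude. This is a minimal illustration under stated assumptions, not the study's pipeline. It assumes you already have the spectrogram as a 2-D array (frequency rows, time columns) with known bin spacings `dt` (seconds) and `df` (Hz), and the function names `mps` and `filter_mps` are hypothetical. The filter is a simple hard cutoff in MPS space; the researchers' actual filtering method, and turning a filtered spectrogram back into audio (which needs phase reconstruction), are not shown.

```python
import numpy as np


def mps(spec_db, dt, df):
    """Hypothetical helper: modulation power spectrum of a log spectrogram.

    spec_db: 2-D array, shape (n_freq, n_time), log-amplitude spectrogram.
    dt: time step between spectrogram columns, in seconds.
    df: frequency step between spectrogram rows, in Hz.
    Returns (power, wt, wf), where power[i, j] is the power at spectral
    modulation wf[i] (cycles per Hz) and temporal modulation wt[j] (Hz).
    """
    n_freq, n_time = spec_db.shape
    # Remove the mean so the DC term does not swamp everything else.
    centered = spec_db - spec_db.mean()
    # 2-D FFT: axis 0 -> spectral modulations, axis 1 -> temporal modulations.
    # fftshift moves zero modulation to the center, as in the usual MPS plot.
    power = np.abs(np.fft.fftshift(np.fft.fft2(centered))) ** 2
    wf = np.fft.fftshift(np.fft.fftfreq(n_freq, d=df))
    wt = np.fft.fftshift(np.fft.fftfreq(n_time, d=dt))
    return power, wt, wf


def filter_mps(spec_db, dt, df, max_wt=None, max_wf=None):
    """Hypothetical helper: low-pass a log spectrogram in MPS space.

    Zeroes temporal modulations faster than max_wt (Hz) and/or spectral
    modulations finer than max_wf (cycles per Hz), then inverts the 2-D FFT.
    """
    n_freq, n_time = spec_db.shape
    mean = spec_db.mean()
    f2d = np.fft.fft2(spec_db - mean)
    # Unshifted modulation axes, shaped to broadcast over the 2-D spectrum.
    wf = np.fft.fftfreq(n_freq, d=df)[:, None]
    wt = np.fft.fftfreq(n_time, d=dt)[None, :]
    keep = np.ones(f2d.shape, dtype=bool)
    if max_wt is not None:
        keep &= np.abs(wt) <= max_wt
    if max_wf is not None:
        keep &= np.abs(wf) <= max_wf
    # The mask is symmetric in +/- modulation, so the inverse stays real.
    f2d[~keep] = 0
    return np.real(np.fft.ifft2(f2d)) + mean
```

For example, `filter_mps(spec_db, dt, df, max_wt=2.0)` removes temporal modulations faster than 2 Hz, loosely analogous to the temporal filtering in panel (b), bottom row; passing `max_wf` instead removes fine spectral detail such as harmonic stacks.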

Pop Outs

Pop-outs occur all the time: when learning a language, when following a conversation in a noisy environment, or when spotting an object in an image after it has been pointed out. Though the phenomenon has been reported anecdotally, the Berkeley team presents data that demonstrate the process. They found that the brain is remarkably quick to reorganize how it processes signals in response to new information.

The researchers worked with epilepsy patients who had implanted electrode arrays. The volunteers were asked to listen to garbled recordings and type out any words they understood. They were then played an unfiltered recording followed by a filtered recording and asked to do the same. Their results were compared with those of subjects who never heard the unfiltered recording. After hearing the intact sentences, the volunteers could understand garbled messages that had previously been unintelligible.

The researchers recorded the brain’s electrical responses to the messages throughout the experiment. When the garbled messages were played, the brain activity showed that the sounds were heard but not understood. When a clear message was played, the activity was consistent with the brain tuning itself towards language. When the garbled message was played a second time, the brain showed language-associated neuronal activity, indicating that it was now deciphering the garbled recording much as it would normal speech. According to the researchers, the brain takes in far more information than it can process; by picking out the pertinent information, it can discard the rest. It does this quickly, by making assumptions and suppressing the noise. The change is not a simple increase or decrease in activity but a shift in the receptive fields of the neurons involved.

Decoding Speech

The Berkeley team has set its sights on using what it discovered to develop a speech decoder. Many diseases affect speech, such as Huntington’s disease and Lou Gehrig’s disease (ALS). These afflictions often cause a loss of motor control. With a decoder, patients would still be able to communicate without needing to use their voice.

Top image: Soundwave (Public Domain)
