We are living through a shortage crisis. Say this and most people think of oil and coal, but those can be replaced with renewable sources such as wind and solar power. Like the current shortages of helium, medical isotopes, and clean water, the next crisis on the horizon is data storage.

Evolving Science has touched on this subject before. Humans are producing more data now than ever before. From Mars rovers to Facebook, it’s thought that we produce 2.5 quintillion bytes of data every day. A quintillion is a 1 followed by 18 zeros; that is the equivalent of 10 million Blu-ray discs stacked to the size of the Eiffel Tower, and that was the estimate in 2015. Our current solution is, of course, to build more storage. China’s Langfang facility and the SuperNAP facility in the US are the current world leaders in big data.

For big data, building new facilities is a stop-gap measure. What is really needed is an improved method of data storage, and many research groups have been investigating ways of storing digital data on DNA. Since DNA effectively stores the data for all life on Earth, why not store our photographs or favorite books on it as well? To give an example of DNA’s utility, if you take all the genes that make up a human being and translate them into bits, a human would take up about 1.5 GB. All the complexity and variation of being you can be stored on a thumb drive.
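The 1.5 GB figure can be checked with a rough calculation. The sketch below is only an estimate: the genome size of about 3.1 billion base pairs per copy, the choice to count both copies, and the encoding of two bits per base are all assumptions that are not stated in the article.

```python
# Back-of-the-envelope estimate of the storage needed for one human genome.
# All three constants below are assumptions, not figures from the article.
BASE_PAIRS_PER_COPY = 3.1e9  # approximate size of one copy of the human genome
COPIES = 2                   # one copy inherited from each parent
BITS_PER_BASE = 2            # four possible bases fit in two bits


def genome_gigabytes(base_pairs: float, copies: int, bits_per_base: int) -> float:
    """Return the genome size in gigabytes (1 GB = 10**9 bytes)."""
    total_bits = base_pairs * copies * bits_per_base
    return total_bits / 8 / 1e9


size_gb = genome_gigabytes(BASE_PAIRS_PER_COPY, COPIES, BITS_PER_BASE)
print(f"about {size_gb:.2f} GB")
```

Under these assumptions the result lands close to the 1.5 GB quoted above.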

Maximizing DNA

Researchers from Columbia University and the New York Genome Center are working on an algorithm to maximize the storage potential of DNA. To begin with, DNA is a robust biomolecule. It is very stable and can last for thousands of years; DNA was recovered from the bones of a 430,000-year-old distant human ancestor in Spain. DNA degrades far more slowly than current media such as cassettes, DVDs, or computer hard drives. This long shelf life also means that DNA storage is unlikely to become obsolete.

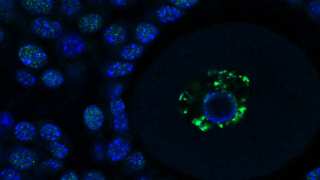

Instead of trying to convert an entire digital file into one long sequence of DNA, the researchers used fountain codes to generate small digital packets, called droplets, that could then be converted into DNA segments. The process begins by breaking the data up into short segments of four bits. These segments are then processed by a series of mathematical operations called a Luby transform to give droplets of six bits. Each pair of bits (00, 01, 10, and 11) corresponds to a nucleotide base (adenine, cytosine, guanine, and thymine, respectively). So a six-bit sequence of 001011 would create the oligonucleotide AGT.
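The bit-to-base step can be sketched in a few lines. This is a minimal illustration that assumes the mapping follows the order given in the text (00 to A, 01 to C, 10 to G, 11 to T); the function name `bits_to_dna` is made up for this example.

```python
# Assumed mapping, in the order the text lists the bit pairs and bases.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}


def bits_to_dna(bits: str) -> str:
    """Translate a string of 0s and 1s (even length) into nucleotide letters."""
    if len(bits) % 2 != 0:
        raise ValueError("bit string must contain an even number of bits")
    # Read the string two bits at a time and look up the matching base.
    pairs = (bits[i:i + 2] for i in range(0, len(bits), 2))
    return "".join(BITS_TO_BASE[pair] for pair in pairs)


print(bits_to_dna("001011"))  # AGT, the example from the text
```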

From here, the sequences are checked to make sure they are valid: sequences containing repeats (TTT) or GC-rich runs (GGG, CCC, GCG, CGC) are discarded, and the data is returned to the Luby transform until a valid sequence is generated. Once the droplets had been screened and approved, the oligonucleotide sequences were synthesized. For this experiment, the team made an archive file that contained an Amazon gift card, an operating system, an image, a PDF file, a computer virus, and a French film from 1895, for a total of 2.1 MB. The end result was 72,000 oligonucleotide strands of DNA, each 200 nucleotides long.
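The generate-then-screen loop can be sketched as follows. This is a toy version under several assumptions that are not taken from the article: each six-bit droplet is laid out as a two-bit seed followed by a four-bit payload, the number of segments combined is drawn uniformly at random (a real Luby transform uses a specially shaped distribution), and a rejected droplet is simply retried with the next seed. The names `make_droplet`, `is_valid`, and `valid_droplets` are made up for this example.

```python
import random

BASES = "ACGT"  # index 0..3 stands for the bit pairs 00, 01, 10, 11
FORBIDDEN = ("TTT", "GGG", "CCC", "GCG", "CGC")  # patterns listed in the text


def to_dna(value: int, n_bits: int) -> str:
    """Write an integer as n_bits binary digits, then as nucleotide letters."""
    bits = format(value, f"0{n_bits}b")
    return "".join(BASES[int(bits[i:i + 2], 2)] for i in range(0, n_bits, 2))


def is_valid(sequence: str) -> bool:
    """Reject any sequence that contains one of the forbidden patterns."""
    return not any(pattern in sequence for pattern in FORBIDDEN)


def make_droplet(segments: list[int], seed: int) -> int:
    """XOR a seed-determined subset of 4-bit segments; prefix the 2-bit seed."""
    rng = random.Random(seed)
    degree = rng.randint(1, len(segments))          # how many segments to mix
    chosen = rng.sample(range(len(segments)), degree)
    payload = 0
    for index in chosen:
        payload ^= segments[index]
    return (seed << 4) | payload                    # assumed seed+payload layout


def valid_droplets(segments: list[int], n_seeds: int = 4) -> list[str]:
    """Try each seed in turn and keep only droplets that pass the screen."""
    kept = []
    for seed in range(n_seeds):
        sequence = to_dna(make_droplet(segments, seed), 6)
        if is_valid(sequence):
            kept.append(sequence)
    return kept


segments = [0b0100, 0b0010, 0b1011, 0b0111]  # four made-up 4-bit segments
print(valid_droplets(segments))
```

Because the seed travels with the payload, a decoder can in principle rerun the same random draw and learn which segments were combined in each droplet.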

To retrieve their files, the team used standard sequencing technologies. Software was developed to take the sequenced genetic code and convert it back into binary code. Some of the strands in the master pool carry redundant information to limit errors, so not all of them are needed for decoding. Once decoded, the archive was reconstituted and opened, revealing perfect recreations of the files.
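Why redundant droplets make decoding tolerant of missing strands can be shown with a small "peeling" decoder, the usual way of decoding a fountain code. This is a simplified sketch rather than the team's software: each droplet here is given directly as the set of segment positions it combines plus their XOR, whereas a real decoder would reconstruct that set from the droplet's seed. The names `make_droplet` and `peel` are made up for this example.

```python
from functools import reduce
from operator import xor


def make_droplet(segments, indices):
    """Pair a set of segment positions with the XOR of those segments."""
    return (frozenset(indices), reduce(xor, (segments[i] for i in indices)))


def peel(droplets, n_segments):
    """Recover segments by repeatedly solving droplets with one unknown left."""
    pending = [(set(indices), value) for indices, value in droplets]
    recovered = {}
    progress = True
    while progress and len(recovered) < n_segments:
        progress = False
        for i, (indices, value) in enumerate(pending):
            # Strip every already-recovered segment out of this droplet.
            for known in indices & set(recovered):
                value ^= recovered[known]
            indices = indices - set(recovered)
            pending[i] = (indices, value)
            if len(indices) == 1:
                (position,) = indices
                recovered[position] = value
                progress = True
    # Positions that could not be recovered are reported as None.
    return [recovered.get(i) for i in range(n_segments)]


segments = [0b0100, 0b0010, 0b1011, 0b0111]
droplets = [
    make_droplet(segments, [0]),
    make_droplet(segments, [0, 1]),
    make_droplet(segments, [1, 2]),
    make_droplet(segments, [2, 3]),
    make_droplet(segments, [0, 3]),  # redundant: not needed if all others arrive
]

print(peel(droplets, 4) == segments)                      # all droplets present
print(peel(droplets[:3] + droplets[4:], 4) == segments)   # one droplet lost
```

In this toy example, losing the fourth droplet does no harm because the fifth one carries enough overlapping information to fill the gap.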

Trouble with DNA

There are some caveats to working with DNA. Because of the physics that holds double-stranded DNA together, some sequences are best avoided, which is why the repeated passes through the Luby transform are needed. If a sequence has too much guanine or cytosine, the strands will not separate; conversely, with too much adenine or thymine, the strands will fall apart.

DNA structure. (Public Domain)

Also, decoding uses up a small portion of the DNA in the master pool. The pool can be copied with standard PCR biochemistry, but this introduces errors in the sequences. There is also a time factor involved in DNA storage. The researchers found that encoding the binary file into DNA oligos took 2.5 minutes and decoding the oligos took 9 minutes. This does not include the time needed to synthesize the DNA or to sequence it, which could take days.

DNA advantages

There are advantages to DNA storage, even if it takes time. Archived data could be stored indefinitely on DNA in cold rooms. Also, the amount of data that can be stored is phenomenal; the method employed could store 2.1 MB on 10 picograms of DNA, potentially allowing for 215 petabytes of data stored per gram of DNA. What can fit in 215 PB of data? All of the world’s music for the past 2,000 years and more. Or, fittingly, you can save all of the DNA sequences of every person in the United States, as well as their clones, twice.
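The per-gram figure follows from the two numbers quoted above. The short check below uses only those rounded values and assumes decimal units (1 MB = 10^6 bytes, 1 PB = 10^15 bytes), so it comes out at about 210 PB per gram rather than exactly 215.

```python
# Rounded figures quoted in the article.
FILE_BYTES = 2.1e6    # 2.1 MB archive
DNA_GRAMS = 10e-12    # 10 picograms of DNA
BYTES_PER_PB = 1e15   # decimal petabyte (assumption about the units used)


def petabytes_per_gram(file_bytes: float, dna_grams: float) -> float:
    """Scale the stored bytes up to one gram of DNA and express them in PB."""
    return file_bytes / dna_grams / BYTES_PER_PB


density = petabytes_per_gram(FILE_BYTES, DNA_GRAMS)
print(f"about {density:.0f} PB per gram")
```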

Top image: Server room (Public Domain). DNA structure (CC BY-SA 4.0).
