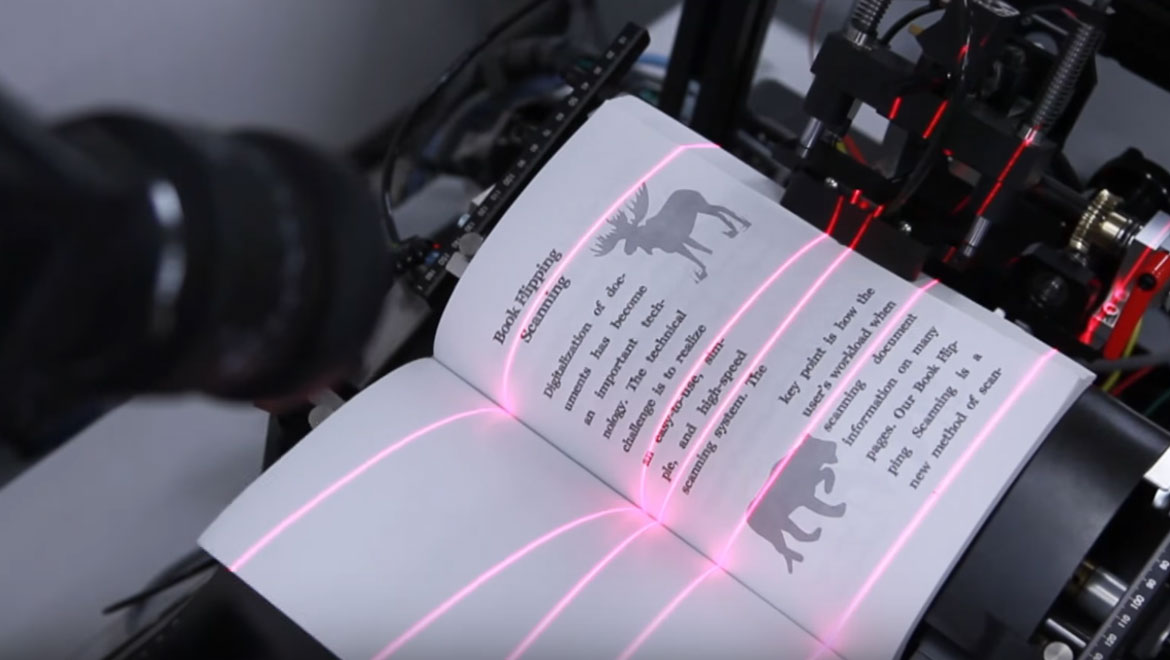

Computers have been instrumental in automating repetitive or mundane processes. Scanning and digitizing a whole book, for example, now takes minutes instead of hours. It is hard for us to imagine living without computers, and a great deal of effort goes into extending their usefulness to every aspect of work and play.

One of the newest areas of research is computer-generated captions. Researchers from the University of Rochester teamed up with computer scientists from Adobe to develop software that automatically generates captions for images. Some will ask whether automated captioning is needed. An estimated 1.1 trillion photos were taken worldwide in 2016 alone with digital cameras, tablets, and mobile phones. Everyone wants to preserve and share their memories, but labeling and organizing each photo by hand is time-consuming.

The difficulty is that generating a meaningful caption requires a high level of image understanding. Computers are good at detecting and classifying objects, but interpreting the content of an arbitrary, previously unseen image requires a level of sophistication that has not yet been achieved.

Words have meanings

The Rochester/Adobe system takes a distinctive approach to image captioning. Competing systems generally take one of two approaches. In a top-down approach, the system generates the gist of the image and converts it into a sentence. This is the state-of-the-art method, but it often misses finer details that may be important in the image. In a bottom-up approach, the system extracts short descriptors from the image and combines them into a sentence. Unlike the top-down approach, bottom-up methods can operate at any image resolution and pull out descriptors freely, but they lack an end-to-end formulation that maps the image directly to a sentence. The Rochester/Adobe model combines both approaches and takes the meanings of the words into account when composing the caption.

Other groups have also tried mixed approaches, but their systems were centered on visual attention: they weight some portions of the image as visually more important than others. Visual attention does play an important role in how people describe scenes, but people do not describe every aspect of an image; instead, they make semantic choices about which important regions to mention.

The Rochester/Adobe team developed a semantic attention system, where "semantic" refers to the ability to give a detailed, coherent description of the important objects in an image. Their model identifies semantically relevant regions in the image, weighs the relative strength of attention paid to each of them, and switches attention between regions dynamically as needed.
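The weighting and switching described above can be pictured with a minimal sketch. It is not the team's model; it rests on stated assumptions: each detected item is a hypothetical attribute word with a made-up embedding vector, the caption generator's current state is a plain vector, and a dot product stands in for the learned relevance score a real system would use. A softmax turns the scores into attention weights (the "relative strength"), and because the weights depend on the state, the most-attended item changes as the state changes. The name `semantic_attention` is hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def semantic_attention(state, attributes):
    """Hypothetical sketch: weight each detected attribute by relevance to the state.

    state: toy vector for the caption generator's current state.
    attributes: dict mapping attribute words to toy embedding vectors.
    Returns (weights, context): per-attribute attention weights and the
    weighted sum of attribute vectors that would be fed back to the generator.
    """
    names = list(attributes)
    # A plain dot product stands in for a learned relevance score (assumption).
    scores = [sum(s * a for s, a in zip(state, attributes[n])) for n in names]
    weights = softmax(scores)
    context = [sum(w * attributes[n][i] for w, n in zip(weights, names))
               for i in range(len(state))]
    return dict(zip(names, weights)), context

# Made-up 3-d embeddings for attributes detected in a photo.
attrs = {"dog": [1.0, 0.0, 0.0], "frisbee": [0.0, 1.0, 0.0], "beach": [0.0, 0.0, 1.0]}

# As the state changes, the most-attended attribute switches.
early, _ = semantic_attention([3.0, 0.5, 0.5], attrs)
later, _ = semantic_attention([0.5, 3.0, 0.5], attrs)
print(max(early, key=early.get), max(later, key=later.get))  # dog frisbee
```

Soft weights rather than a single hard choice let several items contribute at once, which is what "weighing the relative strength of attention" amounts to here.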

Operation

First, the system uses a Convolutional Neural Network (CNN) to extract top-down and bottom-up features, which are used to build global descriptors of the image. A set of attribute descriptors {A} is also acquired. All of this visual data is then fed into a Recurrent Neural Network (RNN), which generates the caption one word at a time. The global descriptors provide an overview of the image, while the RNN incorporates the attributes {A} as the caption evolves, letting them guide each new word. As a result, the system depends on accurate prediction of the visual attributes {A}.
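The paragraph describes a pipeline rather than exact equations, so the following is only a toy sketch of the control flow under made-up assumptions: the global descriptor seeds the state, each step scores the predicted attributes {A} against it, a softmax turns the scores into attention weights, and the top-weighted attribute is emitted. A hand-written penalty on already-used attributes (a coverage-style heuristic and an assumption of this sketch) stands in for the learned RNN update, so attention moves on as the caption grows. `generate_caption` and all vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def generate_caption(global_vec, attributes, steps, penalty=3.0):
    """Toy attribute-guided decoding loop; not a trained RNN.

    global_vec: overview descriptor of the whole image, used to seed the state.
    attributes: dict mapping each predicted attribute in {A} to a toy embedding.
    steps: number of words to emit.
    penalty: hypothetical score penalty for attributes already used; it plays
             the role of the evolving RNN state in remembering what was said.
    Returns the emitted words and the attention weights at each step.
    """
    names = list(attributes)
    used = {n: 0 for n in names}
    words, history = [], []
    for _ in range(steps):
        # Relevance to the global overview, minus a penalty for repetition.
        scores = [sum(g * a for g, a in zip(global_vec, attributes[n])) - penalty * used[n]
                  for n in names]
        weights = softmax(scores)
        best = names[weights.index(max(weights))]
        words.append(best)
        history.append(dict(zip(names, weights)))
        used[best] += 1
    return words, history

attrs = {"dog": [1.0, 0.0, 0.0], "frisbee": [0.0, 1.0, 0.0], "beach": [0.0, 0.0, 1.0]}
words, history = generate_caption([2.0, 1.5, 1.0], attrs, steps=3)
print(words)  # ['dog', 'frisbee', 'beach']
```

Remove "frisbee" from `attrs` and no step can ever emit it, which mirrors the paragraph's point that the captions are only as good as the predicted attributes {A}.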

Performance

The Rochester/Adobe team evaluated their system on Microsoft's COCO and the Flickr30K datasets, more than 150,000 images in total. Teams from Google, Microsoft, Stanford, the University of Toronto, and other institutions also competed in the challenge. Each image comes with five human-written reference captions against which the generated captions are judged. The team reports that their system outperformed the competitors on all benchmarks. The key benefit of their system is that it seamlessly fuses global and local information to produce a superior caption.
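Captioning benchmarks score a generated caption against all of an image's reference captions at once; the COCO caption evaluation code, for instance, reports BLEU alongside other metrics. As a minimal sketch of multi-reference scoring, the function below computes BLEU's clipped (modified) n-gram precision. It leaves out the brevity penalty and the combination over several n-gram sizes that full BLEU adds, and the five reference captions are invented for illustration.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def clipped_precision(candidate, references, n=1):
    """BLEU-style modified n-gram precision of one caption against several references.

    Each candidate n-gram is credited at most as many times as it appears in
    any single reference, so repeating a correct word cannot inflate the score.
    """
    cand = ngrams(candidate.lower().split(), n)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        max_ref |= ngrams(ref.lower().split(), n)  # keeps the max count per n-gram
    clipped = sum(min(count, max_ref[g]) for g, count in cand.items())
    return clipped / sum(cand.values())

# Five made-up reference captions for one image.
refs = [
    "a dog catches a frisbee on the beach",
    "a brown dog jumps for a frisbee",
    "dog playing with a frisbee near the ocean",
    "a dog leaping to catch a flying disc",
    "a dog on the sand catching a frisbee",
]
print(clipped_precision("a dog catches a frisbee", refs))  # 1.0
print(clipped_precision("the the the the the", refs))      # 0.2
```

The clipping is why a degenerate caption that repeats a common word scores poorly even though every one of its words appears in some reference.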

Top image: BFS-Auto: High Speed & High Definition Book Scanner (YouTube)
