Using neuroscience to see, ever more directly, what another person perceives may seem possible only in the realm of science fiction.

But now a team of Canadian researchers has brought such technology closer to reality by converting the brain activity of human participants, recorded while they viewed faces, into corresponding images on a screen.

Facial processing and visual data

This new technique uses electroencephalography (EEG), a common method of recording brain activity. The scientists behind the study favoured it over newer methods such as functional magnetic resonance imaging (fMRI) because EEG captures the relevant 'brainwaves' at a much higher rate, which makes it possible to follow how the perceived image takes shape over time.

The technique is intended to advance the study of facial processing in humans, but it could also be put to other uses in the future.

There is a great deal of research on facial processing, that is, on how the human brain perceives and analyses faces. Because this ability is so important, most notably for social functioning, it draws on extensive brain activity, which may be more sophisticated than that of other animals.

Research on facial recognition has, however, focused mainly on how long it takes to assess or recall a face. Less is known about how facial identity 'fits together', for instance whether faces are initially 'scanned' in terms of shape, features or familiarity.

The small number of studies in this area has found that facial processing does unfold in distinct intervals over time, and that this activity is associated with the fusiform gyrus of the brain. This work would be greatly advanced if researchers could somehow visualise how the brain 'reconstructs' a face.

Working out how the brain recognizes faces

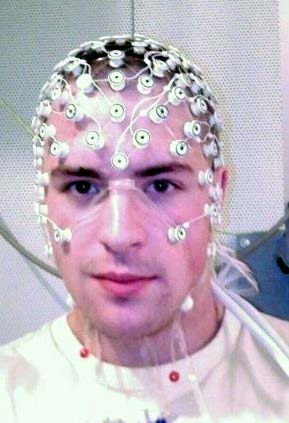

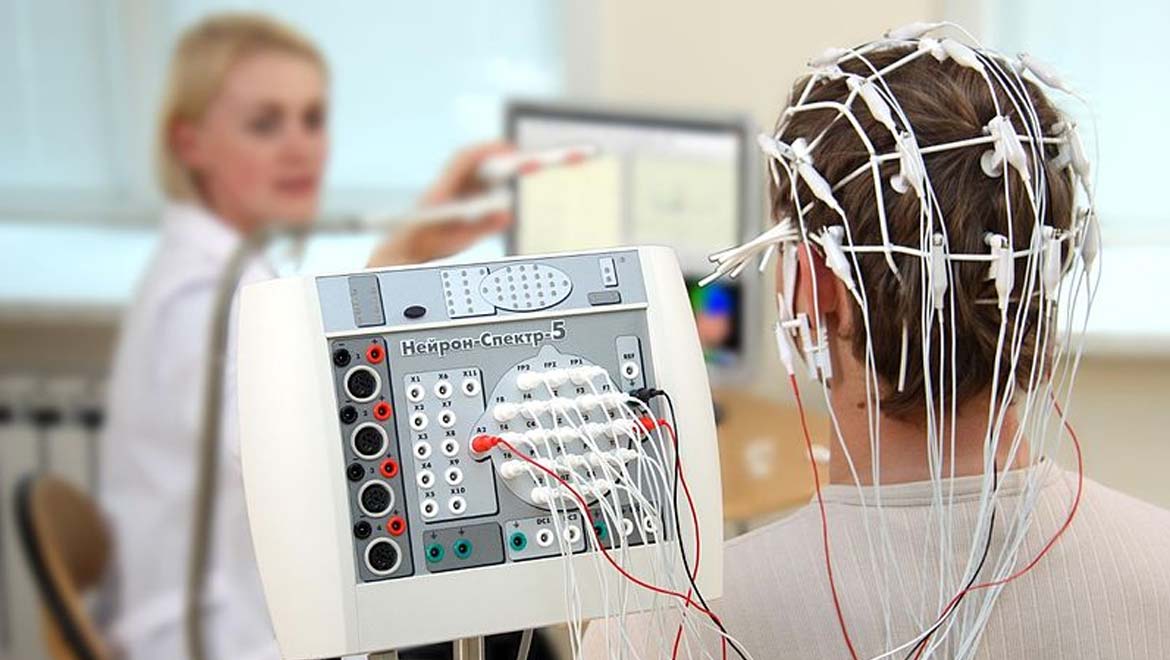

Doing so may also inform the study of face memory and of how the brain processes facial expressions. In practice, however, many researchers make do with EEG data, which is recorded through the skull by an array of electrodes on the scalp, typically worn as a cap by the participant.

These data are converted into graphical representations, which are then analysed in terms of the activity in the brain regions of interest. EEG also records this activity over time. It cannot, however, image the brain directly, as other techniques such as functional MRI can.

Studies of facial recognition and processing using imaging techniques such as fMRI have found, for example, that specific brain regions are responsible for recognising certain shapes as faces, and that the same regions may play a role in our ability to 'see' face-like imagery in non-face objects. However, these studies have not taken into account how this process unfolds over time, or how other information, such as facial expression, is incorporated into it.

In addition, fMRI captures brain activity on a time-scale of seconds, whereas EEG records it on a scale of milliseconds. EEG therefore offers much finer temporal resolution. This may be why a team of researchers at the University of Toronto's Department of Psychology chose it for their new study.
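To put the difference in temporal resolution in concrete terms, here is a small Python calculation. The EEG sampling rate of 512 Hz and the fMRI repetition time of 2 seconds are illustrative assumptions. They are typical values, not the settings used in the study.

```python
# Illustrative acquisition settings (assumptions, not the study's parameters)
EEG_RATE_HZ = 512.0  # EEG samples per second
FMRI_TR_S = 2.0      # seconds between successive fMRI brain volumes


def sample_interval_ms(rate_hz: float) -> float:
    """Time between successive measurements, in milliseconds."""
    return 1000.0 / rate_hz


def measurements_in_window(window_s: float, rate_hz: float) -> int:
    """Number of complete measurements taken during a window of window_s seconds."""
    return int(window_s * rate_hz)


fmri_rate_hz = 1.0 / FMRI_TR_S  # 0.5 volumes per second

print(f"EEG:  one sample every {sample_interval_ms(EEG_RATE_HZ):.2f} ms, "
      f"{measurements_in_window(1.0, EEG_RATE_HZ)} samples in the first second")
print(f"fMRI: one volume every {sample_interval_ms(fmri_rate_hz):.0f} ms, "
      f"{measurements_in_window(1.0, fmri_rate_hz)} complete volumes in the first second")
```

Even under these rough assumptions, EEG produces hundreds of measurements within the first second after a face appears. fMRI, by contrast, may not complete a single volume in that time. This is why EEG suits processes that unfold within a few hundred milliseconds.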

An EEG recording setup. (Public Domain)

The study offered evidence that the EEG patterns associated with face perception unfold in distinct stages: distinguishing between familiar and unfamiliar faces; spatial processing of the facial data; reconstruction of individual features; and reconstruction of the whole face.

The volunteers were six male and six female students from the university, aged 18 to 27. All had normal vision and 'normal' face-memory and visual-imagery abilities, as assessed with the standard Cambridge Face Memory Test and the Vividness of Visual Imagery Questionnaire II, respectively.

They viewed 70 faces with a 'happy' expression and 70 with a 'neutral' expression, so that differences in facial expression, and in how expressions are perceived, could be accounted for. Of these 140 images, 108 were selected randomly from different databases, and the remainder were of celebrities. The images were standardised for gender and ethnicity, although 10 female faces were also included to assess the ability to recognise binary images.

The images were also standardised for face size, background, proportions and contrast, to eliminate 'irrelevant' EEG activity relating to additional details. The EEG data were recorded with a 64-electrode BioSemi system using the standard 10/20 electrode placement. The data were corrected for binary detections and false positives, then processed and analysed using standard tools, including MATLAB.
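The article says only that the data were processed with "standard tools". As a rough illustration of what standard EEG clean-up often involves, the following Python sketch applies two common steps. It baseline-corrects each trial against its pre-stimulus samples and discards trials containing extreme voltages, such as blinks. The 100 µV threshold, the baseline length and the function names are hypothetical choices, not the study's settings, and the study itself used MATLAB.

```python
from typing import List

# One trial ("epoch"): a list of channels, each a list of voltages in microvolts
Epoch = List[List[float]]


def baseline_correct(epoch: Epoch, n_baseline: int) -> Epoch:
    """Subtract each channel's mean over its first n_baseline (pre-stimulus) samples."""
    corrected = []
    for channel in epoch:
        baseline = sum(channel[:n_baseline]) / n_baseline
        corrected.append([v - baseline for v in channel])
    return corrected


def has_artifact(epoch: Epoch, threshold_uv: float) -> bool:
    """True if any sample on any channel exceeds +/- threshold_uv (e.g. a blink)."""
    return any(abs(v) > threshold_uv for channel in epoch for v in channel)


def clean_epochs(epochs: List[Epoch], n_baseline: int,
                 threshold_uv: float = 100.0) -> List[Epoch]:
    """Baseline-correct every epoch, then keep only those without large artifacts."""
    kept = []
    for epoch in epochs:
        corrected = baseline_correct(epoch, n_baseline)
        if not has_artifact(corrected, threshold_uv):
            kept.append(corrected)
    return kept


# Two toy 2-channel epochs; the second contains a blink-like 150 uV spike
good = [[5.0, 5.0, 7.0, 9.0], [-2.0, -2.0, 0.0, 1.0]]
blink = [[0.0, 0.0, 150.0, 20.0], [1.0, 1.0, 2.0, 3.0]]
kept = clean_epochs([good, blink], n_baseline=2)
print(len(kept), kept[0])  # → 1 [[0.0, 0.0, 2.0, 4.0], [0.0, 0.0, 2.0, 3.0]]
```

Real pipelines usually add filtering and more careful artifact handling. The sketch only shows why such clean-up removes activity unrelated to the face being viewed.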

This enabled the Toronto team to identify the distinct stages of facial processing over time. It also confirmed that participants could identify a face regardless of its expression (known as expression-invariant processing). Finally, it allowed the team to reconstruct, from the EEG data, the images as perceived by the participants.
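The article does not detail how the stages were identified over time. One common approach, assumed here, is time-resolved pattern classification. At each time point, a classifier tries to tell which face was shown from the pattern of activity across electrodes, and the time course of its accuracy reveals when identity information is present. The following sketch uses a simple nearest-centroid classifier with leave-one-out testing on invented data. It is a stand-in, not the study's own analysis.

```python
import random
from statistics import mean
from typing import Dict, List, Tuple

# A trial is (face_label, data), where data[t] holds the channel values at time point t
Trial = Tuple[str, List[List[float]]]


def centroid(patterns: List[List[float]]) -> List[float]:
    """Average pattern across trials, channel by channel."""
    return [mean(values) for values in zip(*patterns)]


def sq_dist(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def decode_at_time(trials: List[Trial], t: int) -> float:
    """Leave-one-out nearest-centroid accuracy using only the channel pattern at time t."""
    correct = 0
    for i, (label, data) in enumerate(trials):
        others = [trial for j, trial in enumerate(trials) if j != i]
        centroids: Dict[str, List[float]] = {}
        for face in {lab for lab, _ in others}:
            centroids[face] = centroid([d[t] for lab, d in others if lab == face])
        predicted = min(centroids, key=lambda face: sq_dist(data[t], centroids[face]))
        correct += predicted == label
    return correct / len(trials)


def simulate_trials(n_per_face=10, n_times=6, signal_times=(2, 3), noise_sd=0.1, seed=0):
    """Toy data: face-specific channel patterns appear only at signal_times."""
    rng = random.Random(seed)
    patterns = {"face_A": [1.0, 0.0, 1.0, 0.0], "face_B": [0.0, 1.0, 0.0, 1.0]}
    trials = []
    for face, pattern in patterns.items():
        for _ in range(n_per_face):
            data = []
            for t in range(n_times):
                signal = pattern if t in signal_times else [0.0] * len(pattern)
                data.append([s + rng.gauss(0.0, noise_sd) for s in signal])
            trials.append((face, data))
    return trials


trials = simulate_trials()
for t in range(6):
    print(f"time point {t}: accuracy {decode_at_time(trials, t):.2f}")
```

In this toy example, accuracy is perfect at time points 2 and 3, where the face-specific signal was placed. Elsewhere it carries no reliable information. This is the kind of time course from which processing stages can be read off.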

This technique had previously been developed for fMRI data, where it converted spatial face data into corresponding images. It works by converting feature-specific data into relevant image fragments. This process, known as reverse correlation-based machine learning, generates 'classification images', which are combined to represent the complete facial space.
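The reverse-correlation idea can be sketched in a few lines of Python, under several simplifying assumptions. Each face is assumed to already have coordinates in a 'face space'; in the real work these would be derived from brain-activity patterns, but here they are invented. Faces are also flattened to short lists of pixel values, and dividing by the sum of squared coordinates is our own scaling choice. The sketch illustrates the general principle, not the published pipeline.

```python
from typing import List, Sequence, Tuple

Image = List[float]  # a face image flattened to a list of pixel intensities


def mean_image(images: Sequence[Image]) -> Image:
    n = len(images)
    return [sum(pixels) / n for pixels in zip(*images)]


def classification_images(images: Sequence[Image],
                          coords: Sequence[Sequence[float]]) -> Tuple[Image, List[Image]]:
    """Return the average face and one classification image per face-space dimension.

    Each classification image weights every face's deviation from the average face
    by that face's coordinate on the dimension (the reverse-correlation step).
    Dividing by the sum of squared coordinates is an assumed scaling choice.
    """
    avg = mean_image(images)
    cis = []
    for k in range(len(coords[0])):
        weights = [c[k] for c in coords]
        norm = sum(w * w for w in weights)
        ci = [sum(w * (img[p] - avg[p]) for w, img in zip(weights, images)) / norm
              for p in range(len(avg))]
        cis.append(ci)
    return avg, cis


def reconstruct(avg: Image, cis: List[Image], target_coords: Sequence[float]) -> Image:
    """Average face plus each classification image scaled by the target's coordinate."""
    recon = list(avg)
    for ci, weight in zip(cis, target_coords):
        recon = [r + weight * v for r, v in zip(recon, ci)]
    return recon


# Toy 4-pixel "faces" generated from two hypothetical face-space dimensions
BASE = [0.5, 0.5, 0.5, 0.5]
DIM_1 = [0.1, 0.0, -0.1, 0.0]
DIM_2 = [0.0, 0.2, 0.0, -0.2]


def make_face(c1: float, c2: float) -> Image:
    return [b + c1 * d1 + c2 * d2 for b, d1, d2 in zip(BASE, DIM_1, DIM_2)]


coords = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
faces = [make_face(c1, c2) for c1, c2 in coords]
avg, cis = classification_images(faces, coords)
recon = reconstruct(avg, cis, (0.5, -0.5))
print([round(v, 3) for v in recon])  # → [0.55, 0.4, 0.45, 0.6]
```

Because these toy faces were generated exactly from the two dimensions, the reconstruction recovers the intended image. With real neural data, the estimated coordinates are noisy, so reconstructions are only approximate.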

Faces reconstructed using this technique can be composed of as many as 20 separate classification images. Unlike the fMRI-based version, this allowed the team to map faces according to their expression, using the brain activity specifically associated with each expression. The reconstructed images therefore depended on how much of the face space each of the two expression types in the study occupied. The team found that these images could be generated with an overall accuracy of nearly 70% for happy faces and 64% for neutral faces.
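The article does not say how reconstruction accuracy was measured. One common way to express it, assumed here, is a pairwise test. Each reconstruction is compared with its true target and with every other face, and a comparison counts as correct when the reconstruction is closer to the target. Chance is then 50%, which puts figures of 64% to 70% in context. The pixel-wise Euclidean distance and the function names are illustrative choices.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_accuracy(recon: Sequence[float], target_index: int,
                      faces: List[Sequence[float]]) -> float:
    """Share of foil faces that are farther from the reconstruction than the true target is.

    0.5 is chance level; 1.0 means the reconstruction is closest to its own target
    in every comparison.
    """
    d_target = euclidean(recon, faces[target_index])
    wins = [euclidean(recon, face) > d_target
            for i, face in enumerate(faces) if i != target_index]
    return sum(wins) / len(wins)


faces = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
print(pairwise_accuracy([0.9, 1.1], 1, faces))  # → 1.0
print(pairwise_accuracy([1.8, 1.8], 1, faces))  # → 0.6666666666666666
```

In the second call, the reconstruction has drifted towards a neighbouring face, so it wins only two of its three comparisons. Averaging such scores over all reconstructions gives an overall accuracy figure.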

The study provided evidence for several new insights into how humans process facial images, most notably that this processing is visual rather than semantic. Furthermore, EEG data could be converted into computer-generated 'point-of-view' images with reasonably high accuracy, regardless of factors such as differences in the expressions of the faces being viewed.

The team found that the information needed to generate such an image was present in a participant's brain activity from about 50 to 650 milliseconds after a face was shown. The technique therefore appears better suited to EEG, with its fine temporal resolution, than to fMRI, and it may be incorporated into future work on facial recognition or recall in human participants.
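The following sketch shows one way a time window such as 50 to 650 milliseconds might be turned into a single feature vector: each electrode's samples within the window are concatenated. The 64 electrodes come from the study. The 512 Hz sampling rate, the epoch starting 100 ms before the face appears, and the function names are assumptions made for illustration.

```python
import math
from typing import List


def window_indices(start_ms: float, end_ms: float, rate_hz: float,
                   epoch_start_ms: float) -> range:
    """Indices of samples whose time stamps fall within [start_ms, end_ms].

    Sample i of an epoch is taken at epoch_start_ms + i * 1000 / rate_hz.
    """
    first = math.ceil((start_ms - epoch_start_ms) * rate_hz / 1000.0)
    last = math.floor((end_ms - epoch_start_ms) * rate_hz / 1000.0)
    return range(first, last + 1)


def spatiotemporal_features(epoch: List[List[float]], indices: range) -> List[float]:
    """Concatenate every channel's samples within the window into one feature vector."""
    return [channel[i] for channel in epoch for i in indices]


# Assumed settings: 512 Hz sampling, epochs starting 100 ms before the face appears
idx = window_indices(50, 650, rate_hz=512, epoch_start_ms=-100)
print(idx.start, idx.stop - 1, len(idx))  # → 77 384 308
print(len(idx) * 64)  # 64 electrodes → 19712 features per trial
```

Pooling the whole window in this way trades temporal detail for more information per trial. A time-resolved analysis instead keeps each time point separate.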

In addition, such technology may find practical applications, such as more accurate reconstruction of images of suspects in law enforcement. It may also advance the study of conditions, such as prosopagnosia, that impair a person's ability to recognise or remember faces. One day, the technique may even make it possible to recreate the perception of other sensory information, which could in turn lead to more sophisticated and immersive artificial environments, such as virtual- or augmented-reality products.

Top image: The process of recording electroencephalography (CC BY-SA 4.0)

References

Nemrodov D, Niemeier M, Patel A, Nestor A. The Neural Dynamics of Facial Identity Processing: Insights from EEG-Based Pattern Analysis and Image Reconstruction. eNeuro. 2018.

Nestor A, Plaut DC, Behrmann M. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci U S A. 2011;108(24):9998-10003.

Sormaz M, Young AW, Andrews TJ. Contributions of feature shapes and surface cues to the recognition of facial expressions. Vision Research. 2016;127:1-10.
