Social media company Facebook appears to want to identify all of its users in the photos available to it. That, at least, is one way to read the organization's current drive to research AI programs that process images of faces to remove errors and unwanted features.

The newest example of this technology can even turn pictures of people with their eyes closed into pictures with their eyes open. The purpose of this work is still not clear, but it could be to help users who post images of themselves look their best.

However, the ever-increasing effectiveness of this kind of algorithm may worry those concerned about online privacy and about the veracity of online content in general.

Machine Learning and Selfies

Examples of algorithms that can correct pictures include deep neural networks (DNNs), in particular deep convolutional networks. People or programs can use these tools to correct and enhance facial images for the best possible appearance. These corrections include blemish removal, skin-tone editing, and facial re-angling and re-proportioning.

This kind of algorithm is becoming increasingly popular and can even be found in the selfie cameras of many current smartphones (e.g., the ‘Beauty Mode’ on Asus and Huawei flagship models).

However, it now appears that the capabilities of these algorithms have extended to transferring features from one selfie to another that lacks them. For example, the open eyes in one picture could be used to correct another picture of the same person in which the eyes are closed.

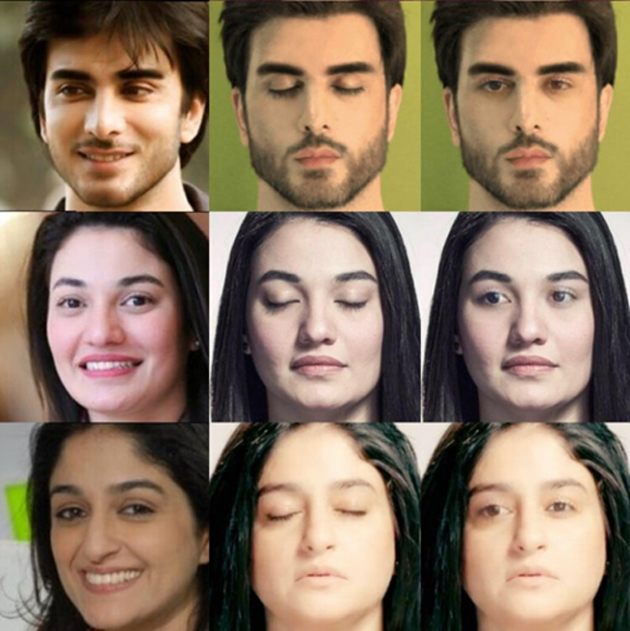

Facebook has designed an algorithm that could help users take better selfies: photographs with closed eyes can be converted into ones with open eyes. (Source: Facebook)

This can be achieved by going beyond a single DNN to a generative adversarial network (GAN).

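Like a DNN, a GAN can evaluate and correct facial images. However, a GAN can also ‘learn’ the various component features of selfies and apply them to the next one it comes across. It does this by pitting two networks against each other: a generator that produces images and a discriminator that tries to tell generated images from real ones.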
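To make the adversarial idea concrete, here is a minimal sketch of the standard GAN loss functions. It assumes a GAN's two networks: a generator that produces images and a discriminator that outputs the probability that an image is real. The function names and the scalar probabilities are hypothetical stand-ins for real network outputs; this is not Facebook's training code.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy loss for the discriminator.

    d_real: the discriminator's probability that a real photo is real.
    d_fake: the discriminator's probability that a generated photo is real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: small when the discriminator is fooled."""
    return -math.log(d_fake)


# A discriminator that is confident and correct has a small loss.
print(discriminator_loss(d_real=0.9, d_fake=0.1))
# The generator's loss falls as its fakes are judged more likely to be real.
print(generator_loss(0.1), generator_loss(0.9))
```

During training the two losses pull in opposite directions: the discriminator improves by pushing d_real up and d_fake down, while the generator improves by pushing d_fake up.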

Some GANs may also be able to construct new or better images based on what they have learned. For example, the system may be able to extrapolate a missing feature based on what it has ‘seen’ before. This process, known as in-painting, is necessary to insert open eyes into a closed-eyes image or blinking selfie.
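In-painting does not have to involve learning at all. The sketch below uses a classical, non-GAN technique, repeatedly averaging each missing pixel with its neighbours, purely to show what filling a gap from its surroundings means. The function name and the list-of-rows image format are assumptions for illustration. A method like this can only smooth over a hole; it cannot invent an open eye it has never seen, which is why a learned approach is needed.

```python
def inpaint_by_diffusion(image, mask, iterations=500):
    """Fill missing pixels of a grayscale image by repeated neighbour averaging.

    image: list of rows of floats. mask: same shape, True where a pixel is missing.
    Known pixels never change; missing ones converge towards a smooth
    interpolation of the pixels around the hole.
    """
    height, width = len(image), len(image[0])
    # Discard whatever was in the hole and start from zero.
    out = [[0.0 if mask[y][x] else float(image[y][x]) for x in range(width)]
           for y in range(height)]
    for _ in range(iterations):
        updated = [row[:] for row in out]
        for y in range(height):
            for x in range(width):
                if not mask[y][x]:
                    continue
                # Average of the up/down/left/right neighbours that exist.
                neighbours = [out[ny][nx]
                              for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                              if 0 <= ny < height and 0 <= nx < width]
                updated[y][x] = sum(neighbours) / len(neighbours)
        out = updated
    return out


# A 5x5 horizontal gradient with its central 3x3 block missing.
gradient = [[10.0 * x for x in range(5)] for _ in range(5)]
hole = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
filled = inpaint_by_diffusion(gradient, hole)
print([round(v, 2) for v in filled[2]])
```

On a smooth gradient the hole is recovered almost exactly, but on a face the same method would produce only a blurry smear where the eyes should be.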

The GAN as a Photo-Editing Tool

The GAN can in-paint in an exceptionally convincing way, matching the newly opened eyes to the lighting and directional information in the selfie being corrected. As a result, the processed image is meant to look as natural, plausible and realistic as possible.

The alternative is a picture in which the eyes look as though they have simply been roughly copied and pasted into place.
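The difference between a rough copy-and-paste and a more convincing edit can be illustrated with a crude, hand-written compositing sketch: the pasted patch is rescaled so its average brightness matches the area it replaces, and its border is blended (feathered) into the pixels underneath. The function and its parameters are hypothetical, and this is not how the exGAN works; a GAN learns to match lighting rather than applying a fixed rule like this.

```python
def composite(target, patch, top, left, feather=1):
    """Paste a grayscale patch into target at (top, left), matching brightness.

    feather=0 gives a hard paste; larger values blend a wider border of the
    patch with the pixels underneath, hiding the seam.
    """
    h, w = len(patch), len(patch[0])
    region = [target[top + y][left + x] for y in range(h) for x in range(w)]
    patch_values = [v for row in patch for v in row]
    patch_mean = sum(patch_values) / len(patch_values)
    region_mean = sum(region) / len(region)
    # Crude lighting match: scale the patch so its mean equals the replaced area's.
    gain = region_mean / patch_mean if patch_mean else 1.0
    out = [row[:] for row in target]
    for y in range(h):
        for x in range(w):
            # Distance in pixels to the nearest edge of the patch (0 on its border).
            edge = min(y, x, h - 1 - y, w - 1 - x)
            # Opacity ramps from 1 / (feather + 1) on the border up to 1 inside.
            alpha = min(1.0, (edge + 1) / (feather + 1))
            src = patch[y][x] * gain
            dst = target[top + y][left + x]
            out[top + y][left + x] = alpha * src + (1.0 - alpha) * dst
    return out


background = [[50.0] * 6 for _ in range(6)]
# A bright 4x4 patch whose centre is brighter still.
bright = [[300.0 if 1 <= y <= 2 and 1 <= x <= 2 else 100.0 for x in range(4)]
          for y in range(4)]
result = composite(background, bright, top=1, left=1, feather=1)
```

With feather=0 the brightness-matched patch is pasted hard, which tends to leave visible seams; a larger feather value widens the blended border.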

The reference information that makes all of this possible, such as another photo of the same person, is known as an exemplar. Hence the name exemplar GAN, or exGAN, for the newest iteration of this technology.
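One simple way to hand an exemplar to an in-painting generator is to stack it with the damaged image as extra input channels. The sketch below assumes a hypothetical layout (masked image channels, then the mask, then the exemplar's channels) and represents images as nested lists; the researchers' actual architecture may arrange its inputs differently.

```python
def build_generator_input(image, mask, exemplar):
    """Stack inputs for an exemplar-conditioned in-painting generator.

    Hypothetical layout: masked image channels, then the mask, then exemplar channels.
    image, exemplar: lists of channel planes, each plane a list of rows.
    mask: one plane with 1 where pixels are missing (e.g. the closed eyes), else 0.
    """
    height, width = len(mask), len(mask[0])
    # Blank out the region the generator must re-create.
    masked = [[[0.0 if mask[y][x] else plane[y][x] for x in range(width)]
               for y in range(height)]
              for plane in image]
    # The exemplar (another photo of the same person) rides along as extra channels.
    return masked + [mask] + exemplar


# A 2x2 RGB photo with its top-left pixel missing, plus a 2x2 RGB exemplar.
photo = [[[0.2, 0.4], [0.6, 0.8]] for _ in range(3)]
eyes_closed = [[1, 0], [0, 0]]
reference = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(3)]
stacked = build_generator_input(photo, eyes_closed, reference)
print(len(stacked))
```

Because the exemplar travels with every input, the generator can learn to copy identity details, such as eye shape and iris colour, from it rather than guessing them.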

The development of the exGAN was showcased in a research paper by Brian Dolhansky and Cristian Canton Ferrer of Facebook.

In the paper, the researchers demonstrate how the information in one selfie can be used by ‘eye-opening’ exGANs to correct another selfie of the same person with their eyes closed. They used exGANs that learned from a whole reference selfie, as well as others that focused more on the eyes themselves and on how best to fit them into new, ‘eyeless’ images.

Dolhansky and Canton Ferrer then applied the best-performing exGANs to a database of thousands of distinct facial images. The results were then validated on a second database containing about 17,000 celebrity selfies.

The researchers claimed that the images in which the exGANs had opened closed eyes convinced a group of volunteers 54% of the time. The participants were shown a reference image and then asked to tell a second, real image of the same face apart from an in-painted one. In the remaining 46% of cases, the participants picked the real image or reported being unsure which one was real.

Does exGAN Processing Have Limits?

From these results, the researchers concluded that both types of exGAN (reference-based and code-based) were reasonably capable of in-painting the eyes of subject images.

On the other hand, they also reported that some of the exGANs, particularly the code-based variety, generated eyes at low resolution. However, the plausibility of these images, as measured by the Fréchet Inception Distance (FID), a metric of perceived image quality for which lower scores are better, still improved over successive training epochs.
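FID, introduced by Heusel et al. (listed in the references), compares the mean and covariance of image features for real and generated images: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2(Sigma_r Sigma_g)^(1/2)). The sketch below is a simplified version that assumes diagonal covariances and takes the feature vectors as given; the real metric uses features from an Inception network and full covariance matrices.

```python
import math
import statistics


def fid_diagonal(real_features, generated_features):
    """Frechet distance between two feature sets, assuming diagonal covariances.

    Each argument is a list of equal-length feature vectors (at least two vectors).
    Lower is better: identical feature statistics give 0.
    """
    total = 0.0
    for d in range(len(real_features[0])):
        r = [v[d] for v in real_features]
        g = [v[d] for v in generated_features]
        mean_term = (statistics.mean(r) - statistics.mean(g)) ** 2
        # With diagonal covariances, Tr(Sr + Sg - 2(Sr Sg)^(1/2)) reduces to
        # a sum over dimensions of (sigma_r - sigma_g)^2.
        std_term = (math.sqrt(statistics.variance(r))
                    - math.sqrt(statistics.variance(g))) ** 2
        total += mean_term + std_term
    return total


real = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
shifted = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
print(fid_diagonal(real, real), fid_diagonal(real, shifted))
```

An improving generator would show this number falling from epoch to epoch as its outputs' feature statistics approach those of real photos.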

In addition, the researchers observed that their new exGANs could still fail to in-paint under certain conditions, such as images in which one eye was occluded or the iris was improperly lit or highlighted.

Finally, the exGANs needed RGB (color) images to get the color attributes of each in-painted feature, such as iris color or skin tone, right.
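To see why color input matters, consider converting pixels to grayscale with the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). In the sketch below, the two RGB values are made-up examples of a bluish and a brownish iris, and `to_gray` is a hypothetical helper; both colors map to the same gray level, so a model given only grayscale input could not tell which iris color to reproduce.

```python
def to_gray(rgb):
    """Convert an 8-bit (R, G, B) triple to a gray level with BT.601 luma weights."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


bluish_iris = (60, 90, 160)    # made-up example color
brownish_iris = (125, 80, 40)  # made-up example color
print(to_gray(bluish_iris), to_gray(brownish_iris))
```

Because many different colors collapse onto the same gray level, the hue information needed to match an iris or skin tone is simply absent from a grayscale image.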

This new algorithm is just one example of how technology has the potential to change reality, or rather how reality is reproduced online. Such advances could lead to scenarios in which important recordings in various media are altered, or fabricated entirely, to change perceptions of events, people or places.

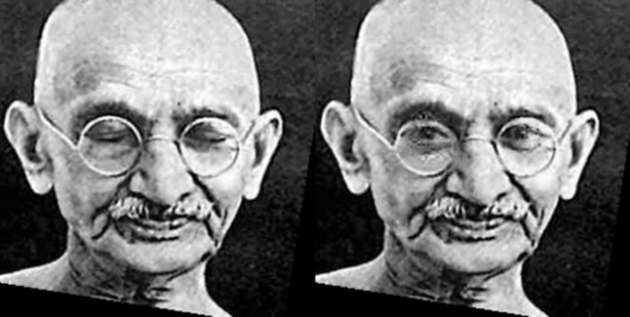

The technique was tested on an image of Mahatma Gandhi, and it was apparent that the presence of his spectacles did not significantly interfere with the algorithm. (Source: Facebook)

The authors of the Facebook paper suggest that the next steps for exGANs could include in-painting incomplete or missing features in other kinds of images, such as scenic photographs.

All in all, technology like this could make people much more skeptical and analytical about the content they view online in the future.

Top Image: Facebook may soon be able to correct nearly any kind of botched selfie. (Source: pixhere.com)

References

The Verge (2018) Facebook can use AI to open your eyes in blinking selfies. https://www.theverge.com/2018/6/19/17478142/facebook-ai-research-blink-selfie-photo-retouching (accessed 24 Jun. 2018)

B. Dolhansky and C. Canton Ferrer (2018) Eye In-Painting with Exemplar Generative Adversarial Networks. Facebook Research.

M. Heusel, et al. (2017) GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. ArXiv e-prints.
