Recent research has shown that logic gates can, in fact, be built from light. This was done by ‘bouncing’ the light between one wavelength and another. Although a physical medium (nanowires, in the case of the logic gates) is still necessary, the approach has the potential to be much faster than traditional transistors.

Now, it appears that light can also be used to build neural networks. The scientists behind this new breakthrough achieved the feat as a proof-of-concept, and also to demonstrate that alternative networks are possible and could shoulder the load of training, which is the most resource-intensive stage of neuromorphic computing as a whole.

The new, light-based method can alleviate the power requirements involved to some extent. On the other hand, it is still relatively primitive at this point.

How to Train your Neural Network

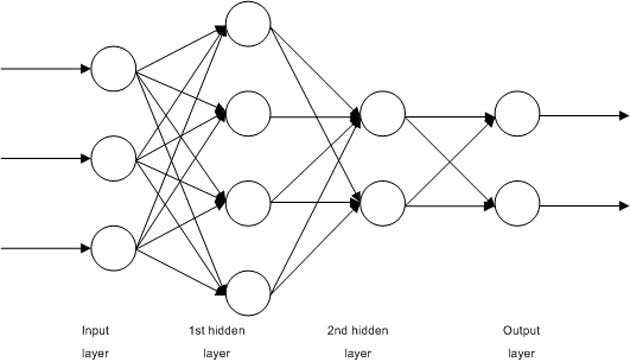

Neural networks function by sending ‘impulses’ from one sub-unit (or neuron) of the system to another, which reacts by passing the impulse on to a subsequent neuron, and so on. This often happens in parallel, with many different impulses, each of which may carry a small amount of data, an inference or an observation, so that the network might resemble a complex flowchart. The impulses may be transmitted and exchanged until they eventually converge on a limited number of neurons. This form of machine consensus may progress until as few as two neurons transmit their impulses to a final point.

The process represents a decision and is not too different from how biological neurons behave in a real-life brain.

The multiple connections between different neurons (depicted here as circuits) in a network eventually contribute to a more limited set of decisions, which contribute to the network’s output. (Source: Public Domain)
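The flow of impulses described above can be sketched in a few lines of Python. This is only an illustration: the layer sizes, the weights and the sigmoid activation are hypothetical choices made for this example and are not taken from the UCLA work. Each neuron adds up its weighted incoming impulses and passes one value on, and the layers narrow until two neurons feed a single final point.

```python
import math


def sigmoid(x):
    """Squash a neuron's summed input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each neuron sums its weighted incoming impulses and emits one value."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the impulses through every layer in turn."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Hypothetical, hand-picked weights: 4 inputs -> 3 neurons -> 2 neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2, 0.1, 0.4],
      [-0.3, 0.8, 0.2, -0.1],
      [0.6, 0.1, -0.5, 0.3]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.4, 0.2],
      [-0.6, 0.5, 0.9]], [0.05, -0.05]),
    ([[1.2, -1.1]], [0.0]),
]

output = network_forward([1.0, 0.0, 1.0, 0.0], LAYERS)
decision = output[0] > 0.5  # the final point turns the impulses into a yes/no
print(output, decision)
```

In a trained network the weights would be adjusted automatically from examples rather than written by hand, and that adjustment is the costly training stage.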

It follows that most of the neural activity goes into accepting, learning and preparing to apply the training imparted by the operator. The networking involved at this stage can enable a neural network to perform a range of tasks, from object recognition to mathematical model generation. Once trained, a network may take mere milliseconds to make the highly accurate decisions required of it. However, this training comes at the cost of increased processing power and energy requirements.

A team of scientists from the University of California (Los Angeles) decided to tackle this problem using the potential of light-based (or optical) computing.

The use of light to train a neural network would, in principle, need little power beyond the light source itself, while also conferring impressive speed (that of light) on transmission between neurons.

On the other hand, the UCLA researchers would also have had to develop their own analogs to traditional, silicon node-based neurons and the connections (sometimes known as artificial synapses) between them.

What’s Old is New Again: Diffractive Material Makes Punch-Cards for Light

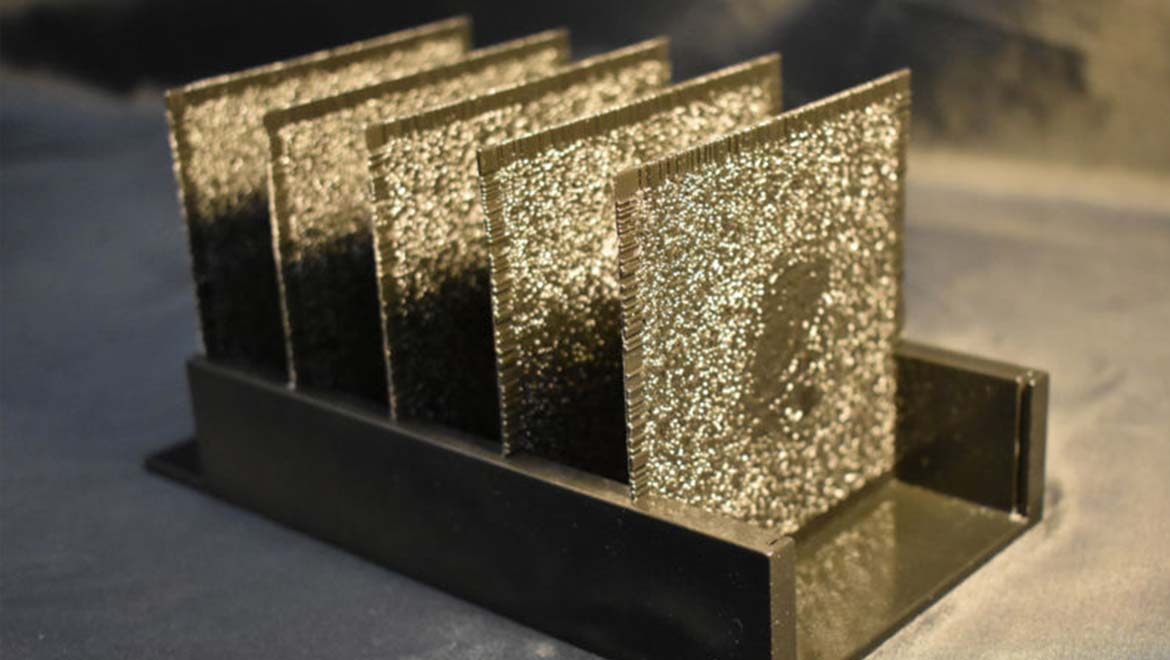

The team's work resulted in thin sheets of material through which light passes at specific angles (i.e., by diffraction) to meet a point on a subsequent sheet, and so on. In practice, the system almost resembles old punch-card data storage.

The light was diffracted through the sheets towards eventual ‘decision’ points, which were picked up by a photodetector positioned to the rear of the sheet array. In this arrangement, the points on the sheets act as the neurons and the paths of light between them act as the synapses. In addition, the initial input could be provided by simply blocking the light to certain points on the first sheet. The researchers reported that they could get this novel form of neural network to recognize objects via this protocol.
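The arrangement described above can be mimicked with a toy simulation. The sketch below is not the researchers' model: it is a one-dimensional, scalar simplification in which every point on a sheet radiates to every point on the next (a Huygens-style sum), each sheet only delays the phase of the light, and two detector regions at the back add up the intensity. The wavelength, spacing and sheet count are illustrative assumptions, and the phase values are random stand-ins for what training would normally provide, so the resulting ‘decision’ carries no meaning.

```python
import cmath
import math
import random

# All values below are assumptions chosen only for illustration.
WAVELENGTH = 0.75e-3  # metres
PITCH = 0.4e-3        # spacing between neighbouring points on a sheet
GAP = 30e-3           # distance between consecutive sheets
N = 32                # points per sheet
K = 2 * math.pi / WAVELENGTH


def propagate(field):
    """Every point on one plane radiates to every point on the next plane."""
    out = []
    for m in range(len(field)):
        total = 0j
        for n, amplitude in enumerate(field):
            r = math.hypot((m - n) * PITCH, GAP)
            total += amplitude * cmath.exp(1j * K * r) / r
        out.append(total)
    return out


def apply_sheet(field, phases):
    """A sheet delays the light at each point without absorbing it."""
    return [u * cmath.exp(1j * p) for u, p in zip(field, phases)]


def run_sheets(input_mask, sheets, n_detectors=2):
    """Send masked light through the sheets and read the detector regions."""
    field = [complex(v) for v in input_mask]  # 1 = light passes, 0 = blocked
    for phases in sheets:
        field = apply_sheet(propagate(field), phases)
    field = propagate(field)  # last hop, from the final sheet to the detector
    intensity = [abs(u) ** 2 for u in field]
    size = len(intensity) // n_detectors
    return [sum(intensity[i * size:(i + 1) * size]) for i in range(n_detectors)]


rng = random.Random(0)
# Untrained, random phase values standing in for learned ones.
sheets = [[rng.uniform(0, 2 * math.pi) for _ in range(N)] for _ in range(3)]
# Input: block the light at the middle eight points of the first plane.
input_mask = [0 if 12 <= i < 20 else 1 for i in range(N)]

readings = run_sheets(input_mask, sheets)
predicted = readings.index(max(readings))  # the brightest region is the 'decision'
print(readings, predicted)
```

The point of the sketch is that, once the sheets are fixed, the computation is carried out by the light travelling through them. The only arithmetic left for the detector side is comparing brightness.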

The physical sheet-based architecture, which can also be produced using a 3D printer, was termed a diffractive deep neural network (or D2NN) by its developers.

The physical objects, which consisted of numbers in typeface and items of clothing, were also 3D-printed for use in the ‘training’ stage of the network’s operation.

However, this caused problems for the network, because both types of object featured blank spaces, which the D2NN interpreted poorly.

To address this, the team reduced the resolution and the dimensional accuracy of the objects to be learned (e.g., the gaps found in a printed number nine would be partially obscured). This decreased the accuracy and speed of the network’s computations.

Optical Computing and the Future

The UCLA scientists published a paper based on this work in the journal Science.

The study suggests, once again, that optical computing is possible and that its repertoire of applications may grow. It is therefore possible that the future holds many more examples of this fully optical data-processing, or that it becomes more closely integrated with conventional electronics.

Top Image: The 3D-printed sheets made up the physical form of the optical neural network. (Source: Ozcan Lab, UCLA)

References

Neural network implemented with light instead of electrons, 2018, Ars Technica, https://arstechnica.com/science/2018/07/neural-network-implemented-with-light-instead-of-electrons/ (accessed 5 August 2018)

Light Computing: Nanowire-Based System Allows For Fully Optical Binary Functions, 2018, Evolving Science, https://www.evolving-science.com/information-communication/optical-binary-functions-00745 (accessed 5 August 2018)

X. Lin, et al. (2018) All-optical machine learning using diffractive deep neural networks. Science.
