How speech and semantics are handled in the brain are subjects that have been well studied over the past few years. However, the specific neural activity associated with understanding words has not yet been fully defined.

Neuroscientists have a good general idea of how this happens, but this understanding is based on studies that used highly specific, experimental 'phrases' designed to test how people distinguish semantically correct (or congruous) words from those that are not.

A new study claims to detect such activity in response to more natural, 'real-world' speech. This research could help identify the brainwaves most strongly associated with the ability to process and interpret the spoken word.

Determining the Brain’s Interpretation of Speech

Humans typically hear and understand speech at rates of up to 200 words per minute. Therefore, the neurological functions that underpin these abilities must be very rapid and may occur in a strongly time-coded fashion.
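As a quick back-of-the-envelope check (simple arithmetic, not a figure from the study), a rate of 200 words per minute leaves roughly 300 milliseconds per word on average. The function name below is illustrative only.

```python
# Average time available per word at a given speaking rate.
# 200 words per minute is the upper rate quoted in the text.
WORDS_PER_MINUTE = 200

def ms_per_word(words_per_minute: float) -> float:
    """Return the average duration of one word, in milliseconds."""
    return 60_000 / words_per_minute

print(ms_per_word(WORDS_PER_MINUTE))  # 300.0
```

A budget of this size means any word-level neural response lasting a few hundred milliseconds may still be unfolding as the next word arrives.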

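This timing has been studied in the past using electroencephalography (EEG), a non-invasive technique that records the brain's electrical activity in real time through electrodes on the scalp. Averaging the EEG around repeated events, such as the onset of a word, yields event-related potentials (ERPs).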

This approach has enabled researchers to link verbal comprehension to the N400, a negative-going ERP component that peaks roughly 400 milliseconds after a word is presented. However, this link is based on studies that probed semantic processing by inserting incongruous words into custom-designed sentences.

Therefore, it is not clear whether the EEG responses from these experiments reflect the natural, real-world understanding of speech.

The most recent study in this area investigated speech comprehension under more natural conditions. It did so by analyzing EEG recorded while participants listened to an audiobook, and relating that EEG to the context of each word.

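To do this, the researchers converted each word of the audiobook text into a vector using word2vec, an algorithm that represents words as points in a high-dimensional space based on the contexts in which they tend to occur. These word vectors formed the basis for relating the text to the listeners' EEG, and in particular to the N400 response previously associated with semantic processing.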

In this kind of vector space, words with related meanings lie close together. Because the audiobook was congruous, semantically intact text read out in a clear voice, its word vectors were generally in close proximity to one another. This gave the researchers a way to relate the EEG to the meaning of the text being processed; in short, to how the speech was being understood.

Using Vectors for Speech Analysis & Brain Activity

The researchers then measured how well each word fitted its context in meaning. They did this by comparing the vector for each word with the average of the vectors of all preceding words in the same sentence.

If a word began a new sentence, its vector was instead compared with the average vector of the whole preceding sentence. Each comparison used Pearson's correlation coefficient, which ranges from -1 to 1, and the coefficient was then subtracted from one. Each word therefore received a semantic dissimilarity value between 0 and 2, with higher values indicating a word that fits its preceding context less well.
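The calculation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the tiny three-dimensional vectors stand in for real word2vec embeddings, the function names are hypothetical, and the very first word of the text (which has no preceding context) is simply given no value.

```python
from math import sqrt
from typing import List, Optional

Vector = List[float]

def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise average of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def pearson(x: Vector, y: Vector) -> float:
    """Pearson correlation coefficient between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def semantic_dissimilarity(sentences: List[List[Vector]]) -> List[Optional[float]]:
    """Return 1 - r for each word, where r correlates the word with its context.

    Context is the average of the preceding words in the same sentence or,
    for a sentence-initial word, the average of the whole previous sentence.
    The first word of the text has no context and gets None (an assumption).
    """
    values: List[Optional[float]] = []
    for s, sentence in enumerate(sentences):
        for i, word in enumerate(sentence):
            if i > 0:
                context = sentence[:i]          # earlier words, same sentence
            elif s > 0:
                context = sentences[s - 1]      # whole preceding sentence
            else:
                values.append(None)             # nothing to compare with
                continue
            values.append(1.0 - pearson(word, mean_vector(context)))
    return values

# Toy "embeddings": the second word follows the same pattern as the first,
# while the next sentence's only word has the reversed pattern.
sentences = [
    [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]],
    [[3.0, 2.0, 1.0]],
]
print(semantic_dissimilarity(sentences))
```

Here the second word correlates perfectly with its context, so its value is close to 0, while the third word is perfectly anti-correlated with the previous sentence, so its value is close to 2: the two ends of the range described above.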

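These values were then placed on the same time base as the EEG recording (sampled at 128 Hz): each word's value was positioned at the moment that word began, producing a time-aligned signal that could be compared directly with the brain activity.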
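A minimal sketch of one way to build such a time-aligned vector, assuming the dissimilarity values and word onset times (in seconds) are already known: each value becomes an impulse at the EEG sample nearest the word's onset, with zeros everywhere else, on the same 128 Hz time base as the recording. The function name and the example timings are hypothetical.

```python
from typing import List, Sequence

EEG_RATE_HZ = 128  # EEG sampling rate quoted for the study

def impulse_train(onsets_s: Sequence[float], values: Sequence[float],
                  duration_s: float, fs: int = EEG_RATE_HZ) -> List[float]:
    """Place each word's value at its onset sample; all other samples are zero."""
    n_samples = int(round(duration_s * fs))
    train = [0.0] * n_samples
    for onset, value in zip(onsets_s, values):
        idx = int(round(onset * fs))  # nearest EEG sample to the word onset
        if 0 <= idx < n_samples:      # ignore words outside the window
            train[idx] = value
    return train

# Hypothetical example: three words within a 2-second stretch of audio.
train = impulse_train([0.10, 0.55, 1.20], [0.4, 0.9, 0.2], duration_s=2.0)
print(len(train))                                    # 256
print([i for i, v in enumerate(train) if v != 0.0])  # [13, 70, 154]
```

Rounding to the nearest sample means onsets are accurate to within about 4 milliseconds (half of one 1/128 s sample), which is small compared with the few-hundred-millisecond responses discussed in this article.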

This analysis was applied to EEG recordings from 19 volunteers, each of whom listened to approximately 60 minutes of an audiobook. The responses of interest were measured at electrodes over the parietal region, along the midline of the scalp, where the N400 is typically largest. At these electrodes, the analysis revealed significant responses.

The same audiobook recording was also played in reverse to ten of the participants, and this produced no significant responses at the same electrodes. Because reversed speech cannot be understood, this suggests that the signals were associated with semantic processing rather than with simple auditory stimulation.

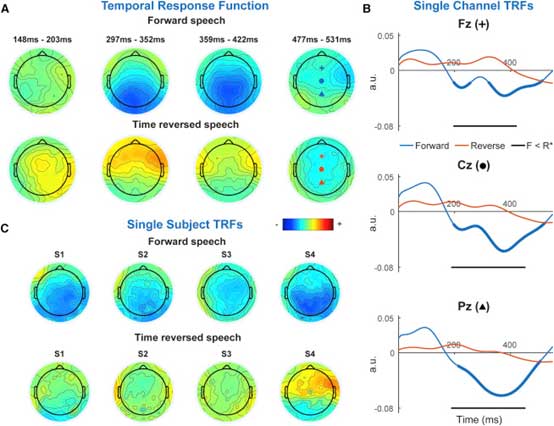

Difference in brain activity during forward (significant) and reversed (not significant) speech. (Source: Broderick, M. P. et al., 2018)

Observations from the Study

The semantic response occurred at a latency of between 200 and 500 milliseconds after word onset, which may reflect the timing of the processes involved in speech comprehension.

This response was absent when the speech was played in reverse, which may be further evidence that the activity is associated with comprehension-related brain functions.
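To illustrate how a latency can be read off a time-aligned stimulus signal, here is a deliberately simplified stand-in: a plain lagged cross-correlation on synthetic data, not the study's own analysis method. The synthetic "EEG" is just the stimulus impulses flipped in sign (N400-like responses are negative-going) and delayed by 40 samples; that delay, the data, and all names are assumptions chosen for illustration.

```python
from typing import Sequence

FS = 128  # EEG sampling rate in Hz, as quoted for the study

def lagged_correlation(stimulus: Sequence[float], eeg: Sequence[float], lag: int) -> float:
    """Unnormalised cross-correlation: sum of stimulus[t] * eeg[t + lag]."""
    return sum(stimulus[t] * eeg[t + lag] for t in range(len(stimulus) - lag))

def estimate_latency_ms(stimulus: Sequence[float], eeg: Sequence[float],
                        max_lag_ms: float = 600.0, fs: int = FS) -> float:
    """Return the lag (in ms) at which the EEG is most negatively related to the stimulus."""
    max_lag = int(max_lag_ms / 1000 * fs)
    best = min(range(max_lag + 1),
               key=lambda lag: lagged_correlation(stimulus, eeg, lag))
    return best * 1000.0 / fs

# Synthetic stimulus: impulses of varying size every 100 samples.
n = 1000
stimulus = [0.0] * n
for k, t in enumerate(range(50, 900, 100)):
    stimulus[t] = 0.5 + 0.1 * k

# Synthetic "EEG": the same impulses, sign-flipped and delayed by 40 samples.
delay = 40
eeg = [0.0] * n
for t in range(n - delay):
    eeg[t + delay] = -stimulus[t]

print(estimate_latency_ms(stimulus, eeg))  # 312.5
```

A 40-sample delay at 128 Hz corresponds to 312.5 milliseconds, which happens to fall inside the 200 to 500 millisecond window reported above only because the delay was chosen that way. Real EEG is noisy, and responses are spread over time rather than being clean copies of the stimulus, so practical analyses use more robust methods than this sketch.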

Additionally, the researchers repeated their analysis on a different dataset, in which 21 participants listened to 15-minute segments of speech overlaid with white noise. Because visual cues are known to improve the understanding of speech that is hard to hear, participants were also given a video of the speaker for part of the material.

Access to the video significantly improved participants' understanding of the segments, both as they reported it and as measured objectively. It also significantly affected the semantic EEG results.

In this third, speech-in-noise stage of the study, the EEG response occurred later, at 380 to 600 milliseconds, which may reflect the additional difficulty of understanding speech in the presence of noise.

The researchers then applied their new model of semantic processing to the 'cocktail party effect'. In this phenomenon, a listener who hears two separate speech sources, one in each ear (dichotic speech), can attend to one of them and recall it in detail while retaining little of the other.

The team tested this in 33 participants who listened to two audiobooks presented dichotically. Sixteen of the participants attended to one book and 17 to the other. Participants correctly answered 80% of the questions about their 'attended' audiobook, but only 29% of the questions about the other book, close to the 25% (1 in 4) expected by chance.

The EEG responses over the parietal region differed significantly between the attended and the 'unattended' audiobooks.

Conclusion

This study suggests that the comprehension of natural speech, in terms of context and semantics, is reflected in the N400 component of the EEG. This component may therefore serve as a marker of whether a given listener is understanding spoken words.

In summary, this work provides evidence that speech comprehension has a specific, time-locked signature in brain activity.

In addition, the difference in N400 latency between the clear-speech and speech-in-noise stages of the study could indicate that this brain activity also plays a role in reading comprehension, as it relates to speech processing.

The study was conducted at the School of Engineering, Trinity Center for Bioengineering, and Trinity College Institute of Neuroscience at Trinity College Dublin (TCD), together with the University of Rochester, New York. It may point to future research on the finer details of parietal cortex function in the understanding of human speech.

Top Image: 3D illustration of human body organ. (Source: Adobe Stock By PIC4U)

References

Broderick MP, Anderson AJ, Di Liberto GM, Crosse MJ, Lalor EC. Electrophysiological correlates of semantic dissimilarity reflect the comprehension of natural, narrative speech. Current Biology. 2018.

Kutas M, Federmeier KD. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology. 2011;62:621-47.

Aydelott J, Jamaluddin Z, Nixon Pearce S. Semantic processing of unattended speech in dichotic listening. The Journal of the Acoustical Society of America. 2015;138(2):964-75.
