Artificial intelligence (AI), in the form of machine learning and deep learning, is carving out a niche for itself in the diagnosis of human cancer. A growing number of studies demonstrate its potential in detecting a range of tumour types.

For example, recent research has shown that AI can be used to identify skin cancer. Cancer diagnoses largely depend on the direct visualisation of medical images by human pathologists, who train for years to tell normal tissues from their diseased counterparts with extreme accuracy. However, it now seems that AI has been developed that can make similarly sensitive judgements on the appearance of cells in these images in order to detect anomalies.

Artificial Intelligence and cancer detection

A recent study, published in JAMA, reported on a set of these algorithms, some of which appear to have out-performed specialists in their own roles. The software had been ‘trained’ to detect lymph node metastases in samples taken from women with breast cancer. The article reporting the study claimed that its ability to diagnose metastasis was significantly superior to that of the pathologists included in the study.

This study was conducted by the Diagnostic Image Analysis Group and the Department of Pathology at Radboud University Medical Center; the Medical Image Analysis Group at the Eindhoven University of Technology; and the Department of Pathology at the University Medical Center Utrecht. It was set up to pit 12 pathologists against a set of deep-learning algorithms.

These algorithms were developed in response to a research challenge, CAMELYON16, in which scientists competed to build the AI that could best detect lymph nodes affected by metastatic breast cancer. All but one of the pathologists were given a maximum of two hours to tell images that showed metastases from those that did not, and to spot the specific locations, or foci, of the metastases in the images they nominated as ‘positive’. The last pathologist was given unlimited time to do the same. The pathologists were set to perform these tasks on a total of 270 separate image slides, 160 of which showed metastases. The algorithms were ‘trained’ to tell images showing these abnormalities from those that did not, and to spot foci. They were then exposed to 129 separate images, 80 of which were normal.

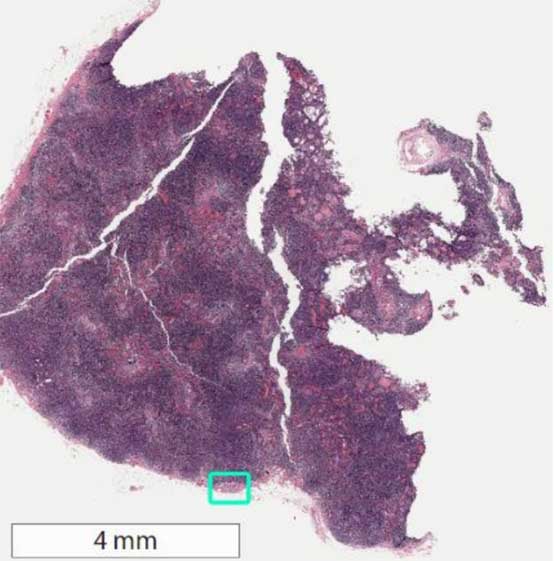

Whole-slide image of sentinel lymph node biopsy with area of metastatic breast cancer highlighted in green square. Image source: (methodsman)

The ability to identify metastases and foci was measured in terms of the area under the receiver operating characteristic curve (AUC), which summarises the trade-off between true positives and false positives. The analysis of the study’s results found that the pathologists under time constraints scored AUCs of between 0.738 and 0.884, with an average of 0.810. The algorithms scored AUCs of 0.983 to 0.999 for the same task, and also recorded a significantly better average AUC (0.994) than these pathologists.
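To make the AUC figures more concrete, here is a minimal Python sketch of how such a score can be computed. It is not the study's own code: the function name `roc_auc` and the toy labels and scores are made up for illustration. It uses the rank-based definition of AUC, namely the probability that a randomly chosen slide with metastases receives a higher score than a randomly chosen normal slide, with ties counted as half.

```python
def roc_auc(labels, scores):
    """Rank-based AUC: chance that a random positive outscores a random negative."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical toy data: 1 = slide with metastases, 0 = normal slide.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
# Hypothetical confidence scores from a reader or an algorithm.
scores = [0.9, 0.8, 0.35, 0.4, 0.2, 0.1, 0.3, 0.7]

auc = roc_auc(labels, scores)
print(f"AUC = {auc:.4f}")
```

An AUC of 1.0 means every positive slide was ranked above every negative one, while 0.5 is no better than guessing. In this toy case a single positive slide scored below one normal slide, which pulls the AUC slightly below 1.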

However, these scores were recorded by a sub-set of the ‘better’ algorithms developed through CAMELYON16; the AUCs for all the algorithms used varied from 0.556 to 0.994. In addition, the ‘best’ algorithm recorded a true-positive rate for actual foci (approximately 72.4%, with a 95% confidence interval of 64.3% to 80.4%) that was comparable to that of the pathologists.
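A confidence interval like the one quoted for the true-positive rate can be estimated in several ways. The sketch below uses a percentile bootstrap, which is an assumption made for illustration and not necessarily the method behind the figures above. The helper `bootstrap_ci` and the counts (72 of 100 foci detected) are hypothetical.

```python
import random


def bootstrap_ci(outcomes, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a proportion of 0/1 outcomes."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(outcomes)
    # Resample the outcomes with replacement and record each resampled rate.
    rates = sorted(
        sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples)
    )
    lower = rates[int((alpha / 2) * n_resamples)]
    upper = rates[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical data: 1 = focus detected, 0 = focus missed.
outcomes = [1] * 72 + [0] * 28

rate = sum(outcomes) / len(outcomes)
low, high = bootstrap_ci(outcomes)
print(f"true-positive rate = {rate:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

The width of the interval depends mainly on how many foci were assessed: with fewer foci the same observed rate would come with a wider interval, which is one reason overlapping intervals are described as ‘comparable’.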

Integrating AI into medical diagnosis

This study suggests that AI should be integrated into the assessment of medical imaging in the diagnosis of lymph node metastasis. The role of humans in this area may diminish as a result. On the other hand, it should be noted that the deep-learning algorithms developed for purposes such as this study are only as good as the programmers who write them, and also depend heavily on the human expertise that produced the images and diagnostic criteria they are initially based on.

Therefore, AI still can’t detect abnormalities without human input to tell it what to look for, even though it can go on to develop more sophisticated methods of judgement over time. In addition, not all metastatic abnormalities look the same, or comply with the normal parameters of abnormality. Recognising them takes skill and education that are currently only available to human students. These points may be reflected in the greater variability (or range) in AUC scores among the pathologist group compared to that of the better-performing algorithms.

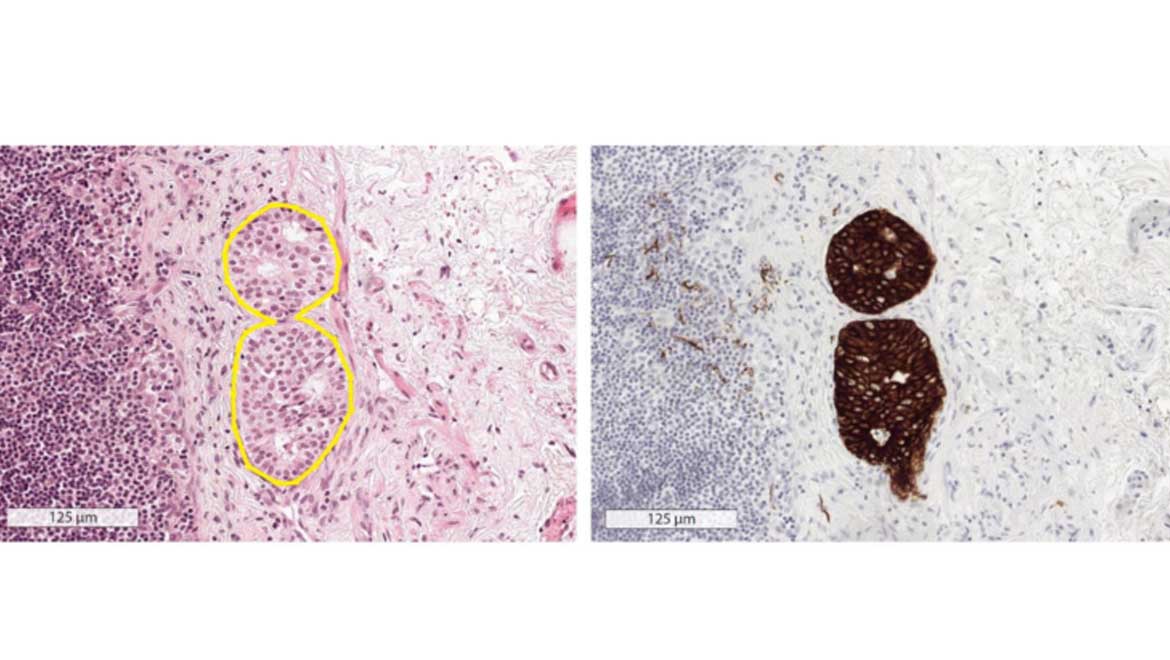

Another important issue highlighted by this article was the effect of time constraints on the pathologists' AUC scores. The single pathologist who was not subject to this factor recorded an AUC of 0.960 (range: 0.923 to 0.994), which was not significantly different from that of the best five AIs (0.966, with a range of 0.927 to 0.998). In addition, all scores in this study were validated by immunohistochemistry assays carried out on the same samples, which outperformed both AI and human detection for accuracy. AI may therefore ultimately be instrumental in streamlining pathologists' imaging assessments, for example by scanning for more conventional anomalies, thus leaving the doctor free to look for more atypical features while validating the ‘work’ of the algorithm. Adaptations such as these may reduce the rate at which disease goes undetected due to time pressure in diagnostic settings.

Research into the potential of AI to revolutionise automated diagnosis is gathering pace. On the other hand, these algorithms depend on their human operators for their accuracy, and are still equalled by pathologists who are not affected by time pressure (in this study, at least). Studies such as this need to be validated at larger scales before we can say that human doctors can be replaced by machines.

Top image: Left: Haematoxylin and eosin (H&E) stain shows area of potential metastatic disease. Right: Immunohistochemical stain gives "gold standard" evidence of metastatic disease. Pathologists and AI agents only had access to H&E images. Image source: (methodsman)

References:

Ehteshami Bejnordi B, Veta M, Johannes van Diest P, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA. 2017;318(22):2199-2210.

Wilson FP. Artificial Intelligence Outperforms Pathologists in Diagnosing Metastatic Breast Cancer. Methodsman Blog. 2017. Available at: https://www.methodsman.com/blog/ai-breast-cancer
