A new study demonstrates that electrical activity in the brain can be decoded and used to synthesize speech. This research may one day give a voice to people who have lost their speech to neurological disorders.

People robbed of the ability to talk by a stroke or another medical condition may one day regain their voices, thanks to new research that harnesses brain activity to produce synthesized speech.

Experts said that the findings were compelling and offered hope for restoring speech.

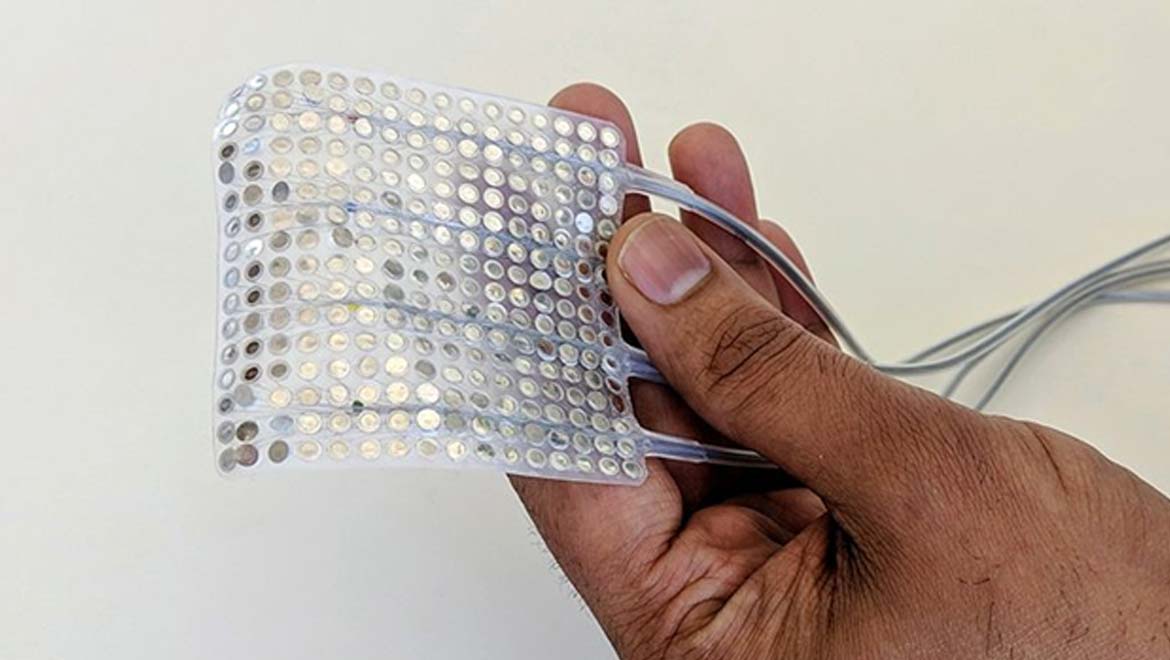

The study, published on Wednesday in Nature, reported data from five patients whose brains were already being monitored for epileptic seizures. Stamp-size arrays of electrodes were placed directly on the surfaces of the participants' brains.

The human brain, shaped by millions of years of evolution, is a remarkably efficient machine, and speech is one of the hallmarks of human behavior. It is this capacity for speech that sets us apart from other primates.

"Very few of us have any real idea, actually, of what's going on in our mouth when we speak," said neurosurgeon Edward Chang, senior author of the study. "The brain translates those thoughts of what you want to say into movements of the vocal tract, and that's what we're trying to decode."

Gopala Anumanchipalli, the study's co-lead author, said that people with ALS could be among those who benefit from this technology. He added, “The brain is intact, but the neurons, the pathways that lead to your arms, or your mouth, or your legs are broken down. These people have high cognitive functioning and abilities, but they cannot accomplish daily tasks like moving about or saying anything. We are essentially bypassing the pathway that’s broken down.”

People with paralyzing conditions, such as motor neuron diseases, often rely on technology to help them speak. (Source: BJ Warnick/Alamy)

How the Thoughts Were Converted into Speech

To translate thoughts into sentences, Anumanchipalli and his colleagues used electrodes placed directly on the brain's surface. Though invasive, this direct monitoring proved key to the study's success. “Because the skull is really hard and it actually acts like a filter, it doesn’t let all the rich activity that's happening underneath come out,” Anumanchipalli said.

Once they had collected high-resolution data, the researchers fed the recorded signals through two artificial neural networks: computer models, loosely inspired by the brain, that find patterns in complex data.

The first network inferred how the brain was signaling the lips, tongue, and jaw to move. The second converted these movements into synthetic speech; it was trained on recordings of the participants' own speech.
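To make the two-stage design concrete, here is a minimal Python sketch of the data flow. It is not the researchers' code: the class and method names are hypothetical, each "network" is reduced to a single layer with random, untrained weights (the study used real neural networks trained on recorded data), and the numbers of electrodes, articulator features, and acoustic features are arbitrary. What it does show is the ordering: each time step of brain activity is first mapped to vocal-tract movements, and only those movements are mapped to sound.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for a trained network's parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def dense_tanh(weights: Matrix, x: Vector) -> Vector:
    """One fully connected layer with a tanh nonlinearity: y = tanh(W x)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


class TwoStageDecoder:
    """Hypothetical toy decoder: brain activity -> vocal-tract movements -> acoustics.

    Random single layers replace the trained networks; training is omitted.
    """

    def __init__(self, n_electrodes: int, n_articulators: int, n_acoustic: int, seed: int = 0):
        rng = random.Random(seed)
        # Stage 1: electrode features -> articulator movements (lips, tongue, jaw...).
        self.stage1 = random_matrix(n_articulators, n_electrodes, rng)
        # Stage 2: articulator movements -> acoustic features for a speech synthesizer.
        self.stage2 = random_matrix(n_acoustic, n_articulators, rng)

    def decode_articulation(self, neural_frame: Vector) -> Vector:
        """Stage 1: infer how the vocal tract is being told to move."""
        return dense_tanh(self.stage1, neural_frame)

    def synthesize_acoustics(self, articulation: Vector) -> Vector:
        """Stage 2: turn vocal-tract movements into acoustic features."""
        return dense_tanh(self.stage2, articulation)

    def decode(self, neural_frames: List[Vector]) -> List[Vector]:
        """Run every time step through stage 1, then stage 2."""
        return [self.synthesize_acoustics(self.decode_articulation(f)) for f in neural_frames]


if __name__ == "__main__":
    decoder = TwoStageDecoder(n_electrodes=8, n_articulators=4, n_acoustic=6)
    # Three made-up time steps of activity from eight hypothetical electrodes.
    frames = [[0.1 * (t + e) for e in range(8)] for t in range(3)]
    acoustics = decoder.decode(frames)
    print(len(acoustics), len(acoustics[0]))  # 3 6
```

Because the intermediate output is a set of vocal-tract movements rather than sound, the second stage could in principle be reused across speakers, which is the idea behind the “universal” decoder Chang describes.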

The system worked reasonably well, though not flawlessly.

Listeners misunderstood about 30% of the synthesized words. For example, some heard “rabbit” when the computer said “rodent.” They also had trouble with sentences containing uncommon words, such as “At twilight on the twelfth day we’ll have Chablis” and “Is this seesaw safe?” These particular sentences were chosen because they include all the phonetic sounds in English.

When choosing from a pool of 25 words, listeners correctly identified about 70% of the synthesized words and transcribed 43% of sentences perfectly. When the pool grew to 50 words, they transcribed 21% of sentences perfectly.
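Sentence-level and word-level figures measure different things: a sentence counts as perfectly transcribed only if every word in it is right, so the share of perfect sentences is typically lower than word accuracy. The Python sketch below illustrates the distinction using a standard word-level edit distance (the basis of word error rate). It is an assumption for illustration, not necessarily the study's exact scoring protocol, and the function names and the “rodent” example transcript are hypothetical.

```python
from typing import List, Tuple


def word_edit_distance(reference: List[str], hypothesis: List[str]) -> int:
    """Minimum number of word substitutions, insertions and deletions (Levenshtein)."""
    prev = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        curr = [i] + [0] * len(hypothesis)
        for j, hyp_word in enumerate(hypothesis, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # listener dropped a word
                          curr[j - 1] + 1,     # listener added a word
                          prev[j - 1] + cost)  # word matched or was misheard
        prev = curr
    return prev[-1]


def score_transcriptions(pairs: List[Tuple[str, str]]) -> Tuple[float, float]:
    """Return (share of sentences transcribed perfectly, mean word error rate).

    Hypothetical scoring helper; each pair is (intended sentence, listener transcript).
    """
    if not pairs:
        raise ValueError("no transcriptions to score")
    perfect = 0
    total_wer = 0.0
    for reference, transcript in pairs:
        ref_words = reference.lower().split()
        hyp_words = transcript.lower().split()
        if not ref_words:
            raise ValueError("reference sentence is empty")
        errors = word_edit_distance(ref_words, hyp_words)
        if errors == 0:
            perfect += 1
        total_wer += errors / len(ref_words)
    return perfect / len(pairs), total_wer / len(pairs)


if __name__ == "__main__":
    trials = [
        ("Is this seesaw safe", "is this seesaw safe"),
        ("the rodent ran away", "the rabbit ran away"),  # hypothetical mishearing
    ]
    share, wer = score_transcriptions(trials)
    print(f"perfect: {share:.0%}, mean WER: {wer:.1%}")  # perfect: 50%, mean WER: 12.5%
```

A single misheard word is enough to take a sentence out of the “perfect” column, even though three of its four words were understood.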

Challenges Ahead…

Edward Chang, the UCSF neurosurgeon who led the study, said the next step is to improve the audio quality so that the synthesized speech sounds more natural and is easier to understand.

He also noted that the movements of the vocal tract are similar from person to person, which means it should be possible to create a “universal” decoder. As Chang put it, "An artificial vocal tract modeled on one person’s voice can be adapted to synthesize speech from another person’s brain activity."

This implant is still a long way from clinical practice. One obstacle is that the technology requires placing electrodes directly on the brain, which means surgery. Neuroscientist Marcel of Carnegie Mellon University told STAT that this requirement could be "a heck of constraint." He is currently working on noninvasive methods to detect thoughts.

Speech that is created by mapping brain activity to movements of the vocal tract and translating them to sound is more easily understood than that produced by mapping brain activity directly to sound, noted Stephanie Riès, a neuroscientist at San Diego State University in California.

According to Amy Orsborn, a neural engineer at the University of Washington in Seattle, it is unclear whether the new speech decoder would work with words that people only think rather than say aloud. She said the work was very good for "mimed speech" but questioned what would happen if someone was not moving their mouth.

Marc Slutzky, a Chicago-based neurologist, agreed with Orsborn and said that the decoder’s performance leaves room for improvement. He noted that listeners identified the synthesized speech by selecting words from a set of choices, and that as the number of choices increased, they had more trouble understanding the words.

The study “is a really important step, but there’s still a long way to go before synthesized speech is easily intelligible,” said Slutzky.

Top Image: This stamp-sized electrode array is placed directly on the brains of patients and can detect slight fluctuations in voltage as the individuals speak. A pair of neural networks then translates this activity into synthesized speech. (Source: UCSF)

References

Gopala K. Anumanchipalli, Josh Chartier & Edward F. Chang: Speech synthesis from neural decoding of spoken sentences. Nature, volume 568, pages 493–498 (2019).

'Exhilarating' implant turns thoughts to speech. Available online at: https://www.bbc.com/news/health-48037592

Scientists turn brain signals into speech, may help people who cannot talk. Available online at: https://health.economictimes.indiatimes.com/news/medical-devices/scientists-turn-brain-signals-into-speech-may-help-people-who-cannot-talk/69039158
